Three lessons from a man who averted nuclear war by not trusting a computer

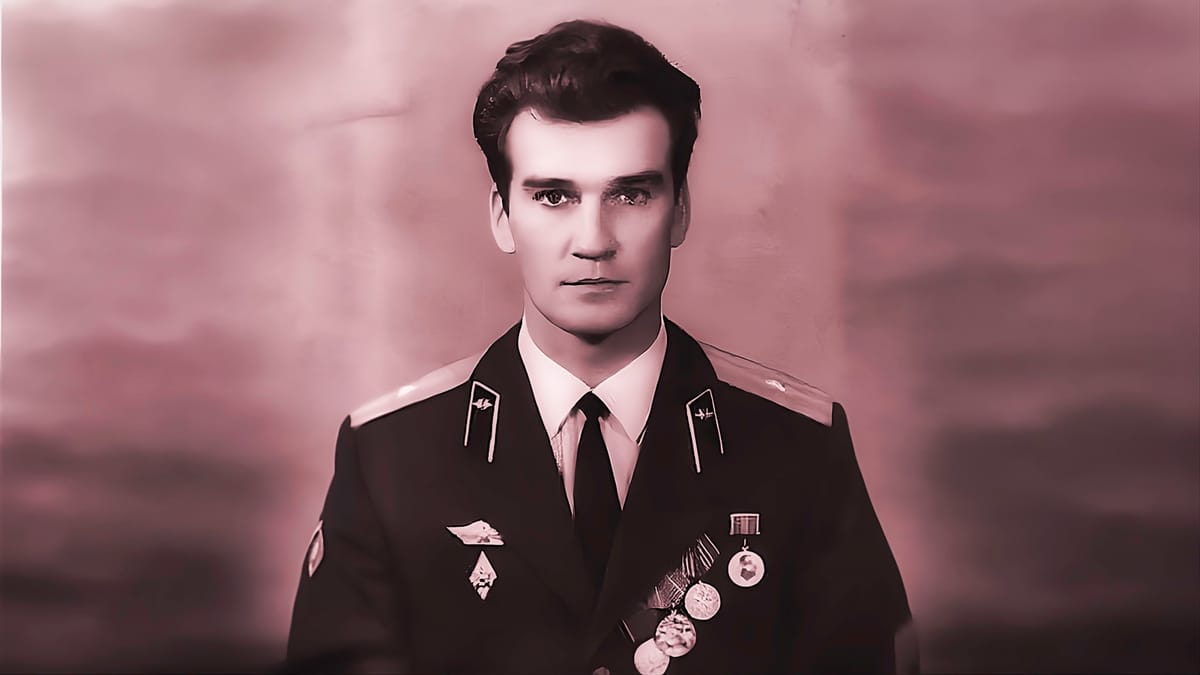

On September 26, 1983, Stanislav Petrov made the correct decision not to trust a computer. The early warning system at the Serpukhov-15 command center, loudly warning of a nuclear attack from the United States, was, of course, modern and up to date. Petrov was in charge, working his second shift in place of a colleague who was ill. Many officers facing the same situation would have called their superiors to alert them of the need for a counterattack, especially with fellow officers shouting at him to retaliate before it was too late. Petrov did not give in.

Watch this reenactment from the documentary The Man Who Saved the World to get a sense of how serious the situation he found himself in was:

I've shared Petrov's story before. A huge number of people owe him their lives, and yet he remains largely unknown to the general public. If we are to avoid placing false and misguided trust in machines, algorithms and AI, we need to learn from people like Stanislav Petrov.

As it turned out, the computer was wrong about the imminent attack, and Petrov likely saved the world from nuclear disaster in those impossibly stressful minutes by daring to wait for ground confirmation. For context, this happened at a time when US-Soviet relations were extremely tense.

Here are three lessons I take away from his story.

1. Embrace multiple perspectives

The fact that it was not Stanislav Petrov's own choice to pursue an army career shows me how important it is to welcome a broad range of experiences and perspectives. Petrov was trained as an engineer rather than as a soldier. He knew how unpredictable machine behavior can be.

Leadership tends to praise the infallibility of automated systems it has invested money and resources in. Military personnel, meanwhile, may be trained to follow procedure based on what the machines tell them, or risk punishment. An engineer, on the other hand, instinctively understands the intrinsic risk of unexpected behavior and false positives in machines.

Speaking about the man he was filling in for at the time of the incident, Petrov said:

"He was just a military guy. He was ill or something. The guys in the army that just have guns in their hands don't think. They accept orders. My weapon was my brain and that's the big difference."

While the comment is very prejudiced by today's standards, it reflects the mindset he encountered on the job.

2. Look for multiple confirmation points

Stanislav Petrov understood what he was looking for. While he admitted he could not be 100% sure the attack wasn't real, he mentioned several factors that played into his decision:

- He had been told a US attack would be all-out. An attack with only five missiles did not make sense to him.

- Ground radar failed to pick up supporting evidence of an attack, even after minutes of waiting.

- The message passed too quickly through the 30 layers of verification he himself had devised.

On top of this, the launch detection system was new, so he did not fully trust it.

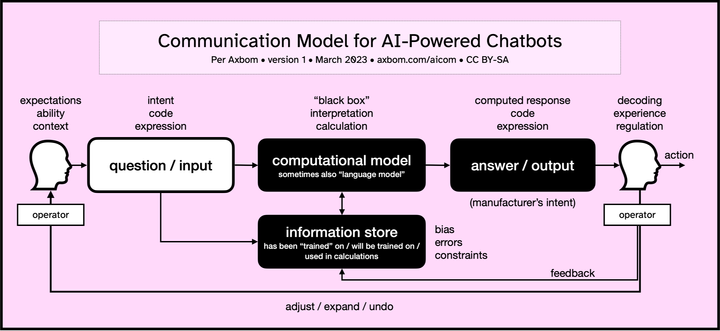

To confirm a belief, we should expect many different variables to line up and tell the same story. If one or more variables say something different, we need to pursue those anomalies to understand why. And if the hypothesis of a faulty system is consistent with all the other variables, that hypothesis becomes more likely.

3. Reward exposure of faulty systems

If we keep praising our tools for their excellence and efficiency, it becomes hard to accept their defects later. When shortcomings are found, they need to be communicated just as clearly and widely as successes. Maintaining an illusion of perfect, neutral and flawless systems keeps people from questioning those systems when they need to be questioned.

Stanislav Petrov received no reward from the military for his actions. At one point he was even reprimanded for not properly documenting the incident in the war diary.

Petrov himself said there was no reward precisely because the event uncovered bugs in the missile detection system. Rewarding him would have embarrassed his superiors and the scientists responsible. And drawing attention to his actions would have meant that someone, likely a superior, would have to be punished.

We need to stop punishing people when a failure helps us understand something that can be improved.

Petrov passed away in May 2017, but we shouldn't stop learning from one of the most momentous decisions in human history. Petrov himself would of course never claim to be a hero of any sort:

All that happened didn't matter to me—it was my job. I was simply doing my job, and I was the right person at the right time, that's all. My late wife for 10 years knew nothing about it. 'So what did you do?' she asked me. 'Nothing. I did nothing.' – Stanislav Petrov

In this quote he also alludes to the fact that he was not allowed to tell his wife, who suffered from cancer, about the incident for 10 years. When Petrov's actions finally became public, the outside world took notice. Among the awards and commendations he received outside the Soviet Union and Russia are:

- 2004: The San Francisco-based Association of World Citizens gave Petrov its World Citizen Award.

- 2006: Honored in a meeting at the United Nations in New York City. The Association of World Citizens presented Petrov with a second special World Citizen Award.

- 2012: Received the 2011 German Media Award, presented to him at a ceremony in Baden-Baden, Germany.

- 2013: Petrov was awarded the Dresden Peace Prize in Dresden, Germany, on February 17.

- 2018: Posthumously honored in New York with the $50,000 Future of Life Award.

Watch the documentary on YouTube or find it on a streaming service.
