Many people are telling me how their companies are running tests of AI tools. This in itself is not strange at all. It's probably difficult as a leader today not to feel stressed by the manufacturers of AI promising revolutionary abilities that will radically change all of business as we know it. Promises that also tend to get big headlines in the news. There is an understandable sense of responsibility to get a handle on the situation.

However, these "trials" take place with varying degrees of instructions and frameworks. The "hurry-hustle-scurry" factor often leads to tunnel vision. Businesses become intimidation-driven rather than data-informed. The testing may, for example, lack the support needed to understand the tools – as well as any guidance on how the evaluation should be documented.

This template can help. Think of it as a way of taking a deep breath before making important decisions.

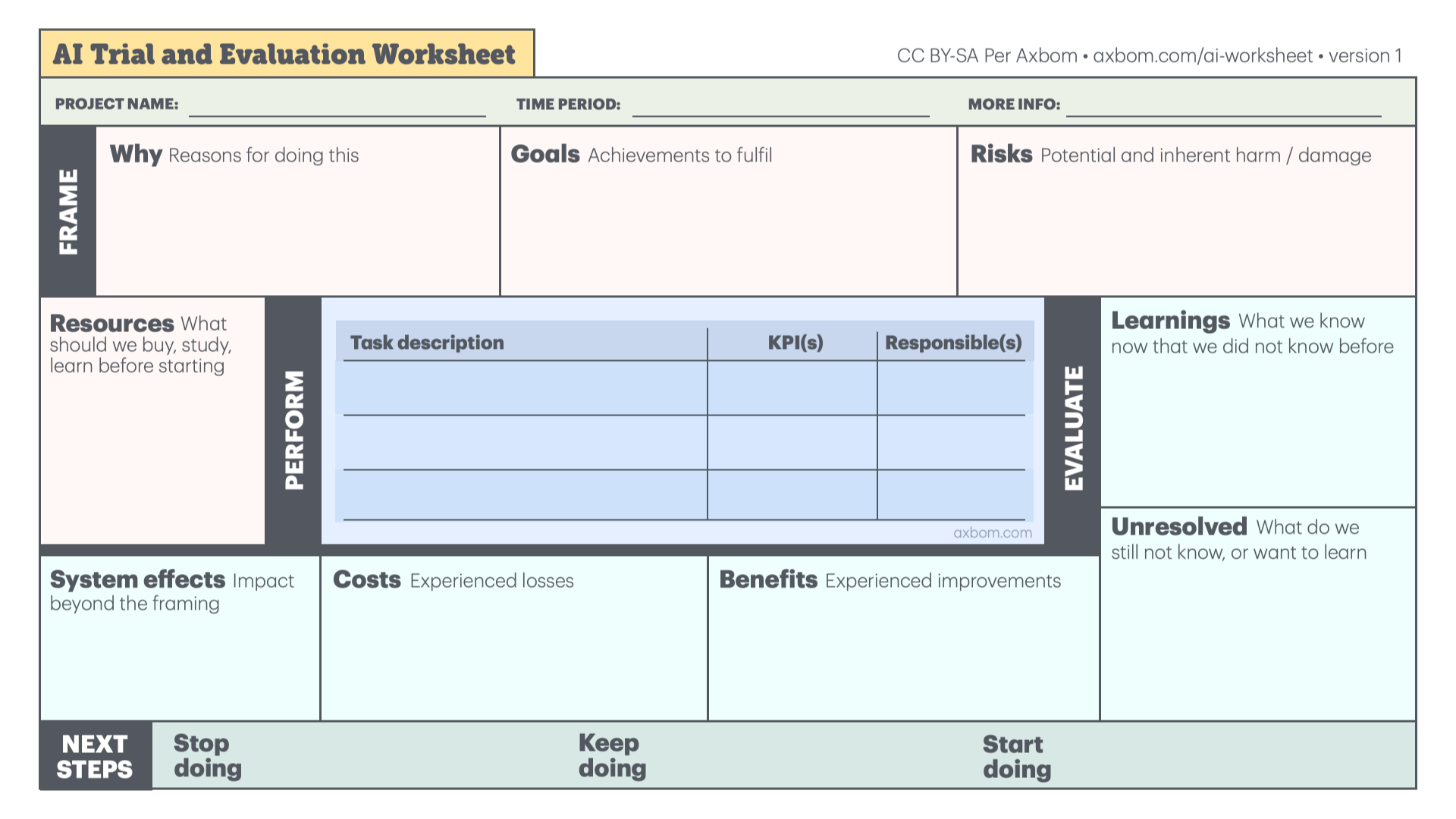

The AI Trial and Evaluation Worksheet gives you a structure for questions and topics that should be part of an AI analysis. Everything from necessary preparation to steps for promoting change.

You can download the template here in PDF and PNG format. An easy way to use it is to mount it as a backdrop in whiteboard tools like Miro, Mural or Figma. You can then have brainstorming sessions with digital 'sticky notes'.

To further help out, I've written briefly about each area below. Note that the template is intended to be used throughout the trial period, and not just in one workshop session.

Worksheet walkthrough

About the project

- Project name. Decide on and write down a name for the trial project for easy reference.

- Time period. Enter the start and end dates for the trial.

- More info. Enter a contact person or web address for further information about the project.

Frame

Questions and considerations that should be in place before the project starts, or at its very start.

Why – Reasons for doing this

What drives us to want to evaluate AI? Is it specific expectations, a sense of urgency or something else? Being honest about the driving force makes it easier, at a later stage, to judge whether that driving force was justified.

Goals – Achievements to fulfil

Be as clear as possible about the types of goals the organization has in mind. For example, certain professions may be expected to work faster, the quality of some work may be expected to increase, or you may want to start doing something you have not done before. It doesn't need to be too detailed yet; details will come later when you set KPIs. The common denominator should be an improvement or change that is considered beneficial for the organization.

Risks – Potential and inherent harm / damage

In order to evaluate safely, it's necessary to discuss in advance the risks that AI usage, and perhaps above all generative AI, can pose. This can be about prejudices that are reinforced, summaries that turn out misleading or information used without permission.

As discussion material for such an exercise, you may for example use my diagram The Elements of AI Ethics. By addressing these early, you can mitigate harmful outcomes.

Resources – What should we buy, study or learn before starting

When preparing for a trip you must pack what you need to get by, as well as understand what to expect ahead of you. How well does everyone understand the technology to be tested? Do you need to take a course beforehand, or is there reading material in the form of articles or books to prepare with? Given what you want to achieve, there might be specific add-ons, products or software that you want to procure.

Make sure you have what you need to make the journey easier and avoid having to cancel it half-way through. Does everyone even agree on what "AI" means?

Perform

For the actual testing of the AI tool, do not try to do or evaluate too many things at once. Too big of a bite risks making it difficult to assess how well a new tool fits with everyday operations. It's also likely that these trial activities take place in parallel with carrying out regular work tasks. If you take on too much, either the everyday work suffers or the evaluation turns out half-assed.

Task description

In the template there is room for three activities under "Task description". This is where you describe what the organization, or selected individuals, will do with the new tool in order to fulfil the goal or goals prioritized in the Goals section. If you want to carry out and map more activities than that, I suggest recreating this part as an extended table.

KPI(s)

As you're aware, KPI stands for Key Performance Indicator and again: it's important not to try to measure too much. A rule of thumb is to keep it to three or fewer indicators per activity. Indicators can for example pertain to time savings, satisfaction (internal or external), error reduction, increased customer engagement, reduced waiting time, and more. This must of course be linked to the goals you described earlier.

As usual when it comes to running workshops, I recommend an open brainstorm activity, which then goes on to limit the number of KPIs through discussion and possibly also a final dot voting exercise (all participants get 3-5 points to distribute to their preferred KPIs).
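If the dot voting happens on a digital whiteboard, the tally can be done by hand, but a small script makes the counting rule explicit. The following is only a minimal sketch: the participant names, KPI names and the `tally_dot_votes` function are made up for illustration, and it assumes each participant gets three dots.

```python
from collections import Counter


def tally_dot_votes(ballots, dots_per_person=3, keep=3):
    """Sum the dots each KPI received and return the `keep` most popular KPIs.

    `ballots` maps a participant to a dict of {kpi_name: dots_placed}.
    """
    totals = Counter()
    for participant, dots in ballots.items():
        # Nobody may hand out more dots than they were given.
        if sum(dots.values()) > dots_per_person:
            raise ValueError(f"{participant} placed more than {dots_per_person} dots")
        totals.update(dots)
    return totals.most_common(keep)


# Hypothetical ballots from a three-person workshop.
ballots = {
    "Ana": {"time saved per report": 2, "error rate": 1},
    "Ben": {"error rate": 2, "customer wait time": 1},
    "Cy": {"time saved per report": 1, "error rate": 1, "staff satisfaction": 1},
}

shortlist = tally_dot_votes(ballots, keep=2)
print(shortlist)
```

The vote is a starting point for discussion, not a verdict: a KPI with few dots may still be kept if it is the only one linked to a prioritized goal.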

Responsible(s)

It should of course also be clear who is responsible for measuring each KPI, ensuring follow-up does not fall through the cracks. The measurements themselves are carried out and documented in a manner deemed appropriate by each respective responsible person.
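For teams that keep the Perform section in a spreadsheet or script rather than on sticky notes, the two rules above (at most three KPIs per activity, and a named person responsible for each KPI) can be checked automatically. This is a rough sketch under my own assumptions: the `Kpi` and `Activity` classes and the `check_activity` function are hypothetical and not part of the template.

```python
from dataclasses import dataclass, field

MAX_KPIS_PER_ACTIVITY = 3  # rule of thumb from the KPI(s) section


@dataclass
class Kpi:
    name: str
    responsible: str = ""  # person who measures and follows up


@dataclass
class Activity:
    description: str
    kpis: list = field(default_factory=list)


def check_activity(activity):
    """Return a list of problems found; an empty list means the plan looks fine."""
    problems = []
    if not activity.kpis:
        problems.append("no KPI defined")
    if len(activity.kpis) > MAX_KPIS_PER_ACTIVITY:
        problems.append(
            f"{len(activity.kpis)} KPIs, rule of thumb is at most {MAX_KPIS_PER_ACTIVITY}"
        )
    for kpi in activity.kpis:
        if not kpi.responsible.strip():
            problems.append(f"KPI '{kpi.name}' has no responsible person")
    return problems


# Hypothetical draft of one trial activity.
draft = Activity(
    "Summarise support tickets with the tool",
    [Kpi("time per ticket", "Dana"), Kpi("customer satisfaction")],
)
print(check_activity(draft))
```

How each measurement is carried out is deliberately left out of the structure, since the text leaves that to the responsible person.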

Evaluate

Now we are in the phase intended for a careful compilation of what we have learned, costs and benefits, and considerations of unintended consequences the activities may lead to. If we have not received answers to all our questions, or new questions have appeared, this will also be clarified.

Learnings – What do we know now that we did not know before

In addition to the reporting on KPIs, there is room here for participants to share a myriad of perspectives that they may have brought with them from their work during the course of the project. This can be an extremely rewarding activity if you allow the highlighting of concrete events as well as more emotional experiences. For example, how the participants have challenged their own preconceived notions about the technology.

System Effects – Impact beyond the framing

It's important to note that the use of new technology can also have an impact in ways that are unpredictable, but which can be uncovered with vigilance. One department's ability to work faster may for example create a higher workload for someone else down the production line. Something that is perceived as better can therefore still contribute to a poorer result.

I also recommend that you acknowledge broader effects of the tools you choose to rely on. For example, there is increased reporting on how AI models require enormous amounts of energy in both production and use. For many organizations, it may be worth reflecting on whether one wants to become dependent on tools that contribute to a bigger carbon footprint, when the same activities can be done in more energy-efficient ways with satisfactory results.

Also worth highlighting are the consequences that often become apparent only after a longer period of use, such as the need for increased storage space, or tools gaining unforeseen access to documents with sensitive information that then becomes available in responses.

Also read: Explaining responsibility, impact and power in AI.

Costs – Perceived losses

Space to bring up costs of various kinds that are associated with the use of the new technology.

Benefits – Perceived benefits

Space to bring up benefits of various kinds that are connected with the use of the new technology.

Unresolved – What do we still not know, or want to learn

In the evaluation, it is valuable to note what is still unanswered, any new uncertainties that have sprung up, and any insights about what needs to be understood better before moving forward. It is not unreasonable for one evaluation to be followed by another that is even more focused on a particular area of activity.

Next steps

We get the greatest benefit from the trial through joint decisions about how the organization should move forward. This is useful and valuable even if the expected benefits from the trial weren't realised. An understanding of what does not work is also a new understanding, and helps the organization in future decision-making.

Remember that experiments of this type never fail - you always learn something new. At least if you use this template. 😉

Here I recommend a simple and popular structure that I myself use in many different contexts to assemble an action plan after acquiring new knowledge, even after something as simple as reading a book.

Stop doing

Is there something I/we are already doing that doesn't have the kind of effect we hope for, or costs more than is called for? This will generate a list of activities that should cease.

Keep doing

Is there something I/we are already doing that produces valuable results with a reasonable work effort – or contributes to well-being, for example? This will generate a list of activities that we continue to carry out as part of our regular work.

Start doing

What have we learned about new ways of doing things that we can use going forward to create value for the organization and/or ourselves? This will generate a list of things that we want to start doing and haven't done before.

Be careful about adding a significant number of activities without also removing some.

In summary

If you and your organization use this template you have a dependable platform for learning in the process of testing new tools, and a framework for sharing and applying those learnings. But all organizations are of course different. You're welcome to adapt and shape the template according to your own needs.

I have applied the license CC BY-SA, which means that you can use it, share it and adapt it however you want as long as you credit me as the creator and don't change the license. A good practice is also to add a link to this page. When I update the template, I always post the new version here.

Do let me know if you make use of it and how your trials turn out. And if you need a workshop leader, you know where to find me.

Interested in more tools and ideas for strategic foresight and ethical considerations? I wrote a small handbook with practical advice back in 2019:

Reading tips

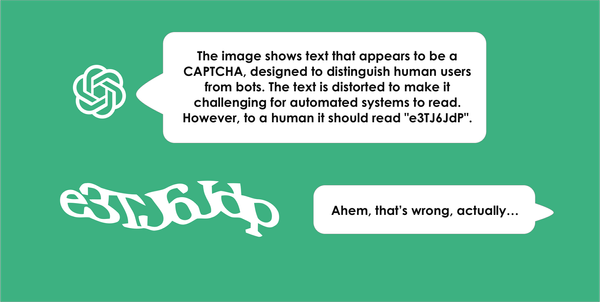

- arXiv. FABLES: Evaluating faithfulness and content selection in book-length summarization. A research study showing the risks of relying on summaries made with language models.

- Baldur Bjarnason: The LLMentalist Effect: how chat-based Large Language Models replicate the mechanisms of a psychic's con. An article from July 2023 that has received a lot of attention in the past week. It explains the mechanisms that lead people to believe in the psychic abilities claimed by mediums and psychics, and shows how the same mechanisms lead people to believe in the magical abilities of language models (chatbots like ChatGPT).

- The Markup: AI Detection Tools Falsely Accuse International Students of Cheating. About how people with English as a second language are more often falsely flagged – by automated systems – as having cheated with the help of AI.
