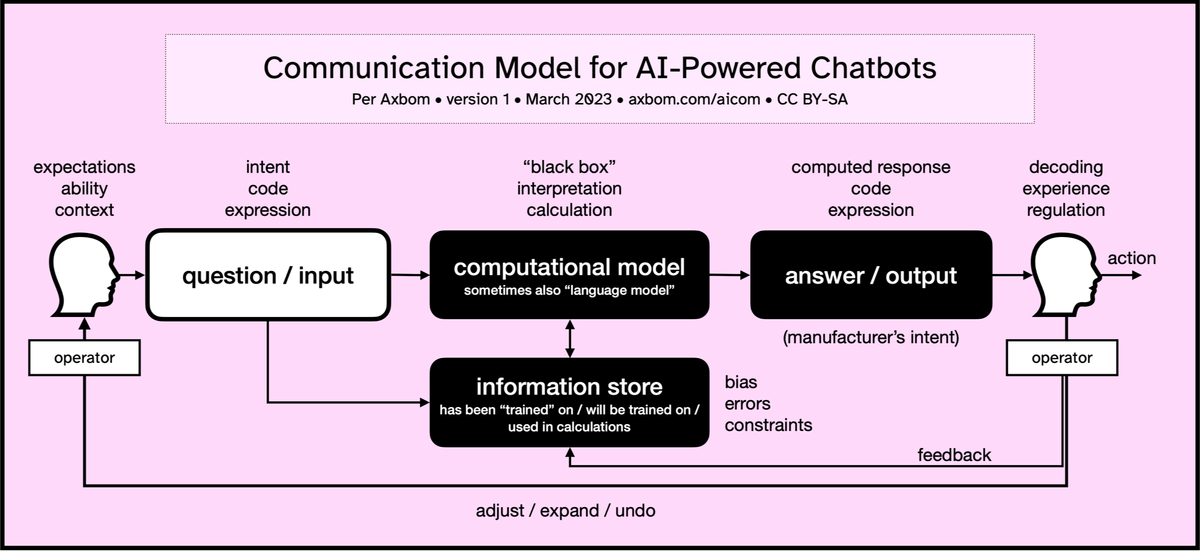

Communication Model for AI-Powered Chatbots

Improve the design of AI tools for ease-of-use and trustworthiness.

I have developed this communication model as a guide for conversations and strategic work aimed at improving AI tools. Note that the focus here is on human interaction with the tool. It is about the components that affect input data (from the human) and output data (from the computer), and by extension how a human chooses to act based on the output data.

The diagram contributes a communication science perspective to help us understand when, where and how, in the use and application of these AI tools, we can make an effort to improve, for example:

- comprehensibility,

- satisfaction and

- benefit.

It is useful in implementation planning, design decisions, communicative efforts and training.

What follows is a summary of the various components. I start on the left side of the model, with an operator who wants to accomplish a task. The word operator is used here to describe a human who controls an interaction with a machine.

Although many examples are based on large language models, this communication model works for all systems where a human asks a question, or gives an instruction, to a computational model.

The humanity of the operator

When a human is to interact with a computer, we need to keep in mind several aspects of the human's circumstances:

- Expectations. How have the person's expectations been formed and how well do they match what is possible in the given situation? How can we help keep these expectations at a good level?

- Ability. Given the person's experience, knowledge, and physical and cognitive capacity, how well equipped are they to interact with the computer? For example, can they phrase inputs and prompts in a way that makes the computer produce a result they are satisfied with? How do we ensure that the tool is adapted to the operator's ability?

- Context. What context is the person currently in, and might it, for example, affect their ability to act as they themselves would like? How do we work to understand context and take it into account?

Input of question, or input data

When the person is asked to enter their question, request – or prompt – it is often done in a way that leaves room for interpretation.

- Intent. Does the person have an intent that we can subsequently check and verify? How do we help the operator phrase their intent?

- Code. Can the person encode their intention in language that is distinct and useful to the computational model, in a way that minimizes ambiguity? What support can help make the encoding of the question more accurate?

- Expression. Does the input contain an expression that can affect the computational model in any direction? For example, this could pertain to formal, casual or childish language. How permissive or guiding should the tool be regarding these expressions?

The computational model starts its process

In the computational model itself – designed to accept the human input – many things are of course happening. Some of the most important aspects to keep in mind are:

- "Black Box". It is hidden from the human how this works. Much is left to imagination and expectations built up by external circumstances. Even if the model used is released as open source, most people don't have the ability to look at that code and understand what's going on. You have to build your own story in your head to deal with the uncertainty. Which stories can be helpful and which stories mislead unnecessarily?

- Interpretation. There is a machine interpretation of the human phrasing, but not in the way that one human interprets another. In the case of a language model, some form of statistical analysis is generally used to calculate which words and sentences are most likely to fit with what the person has entered. This is based on the data used to "train" the model. How confident are we in the interpretation, given the purpose we want the tool to serve?

- Computation (over truth). Calculating the most probable sentences means, among other things, that even if the model has been "trained" on, and only given access to, purely factual texts, it will find combinations of sentences that are probable but not necessarily true. There is no active check of the truth of the computation, and truth is not something the model can determine on its own. The AI model is a computer program that follows instructions. Do we have a good grasp of what the computation actually does, so that we do not promise things it does not, and cannot, do?

One way to describe this is that language models are often optimized so that the answers are perceived as true, not so that they are true. It is a language model, not a knowledge model. Manufacturers often also prefer the answers to come across as if they were written by a human... but we already know that is not the case at all.
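The interpretation and computation points above can be made concrete with a deliberately tiny sketch. It assumes a bigram word-count model, which is far simpler than the neural networks behind real LLMs. The function names and training sentences are hypothetical. Every training sentence is true, yet chaining together the most probable next word produces a fluent sentence that is false.

```python
from collections import Counter, defaultdict


def train_bigrams(texts):
    """Count, for each word, which words follow it in the training texts."""
    follows = defaultdict(Counter)
    for text in texts:
        words = text.lower().split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows


def most_likely_next(follows, word):
    """Return the word seen most often after `word`, or None if `word` is unseen."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


def generate(follows, start, max_words):
    """Greedily extend `start` one most-likely word at a time (no fact checking)."""
    words = [start.lower()]
    while len(words) < max_words:
        nxt = most_likely_next(follows, words[-1])
        if nxt is None:
            break
        words.append(nxt)
    return " ".join(words)


# Hypothetical training set: every sentence in it is true.
training_texts = [
    "paris is the capital of france",
    "rome is the capital of italy",
    "the capital of france is paris",
]
model = train_bigrams(training_texts)

print(most_likely_next(model, "capital"))  # → of
print(generate(model, "rome", 6))          # → rome is the capital of france
```

Nothing in `generate` checks facts. It only follows counts. Because "of france" appears more often than "of italy" in the training data, the probable continuation wins over the true one.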

Consideration of the information store

The information upon which the computational model was "trained" is relevant. In the communication model, this is noted in the information store box, which also includes other information that can potentially contribute to the computational model.

When hearing stories about ChatGPT, it can sometimes sound like the tool has access to the entire Internet as a source of information for providing answers. But of course it's nothing like that. It is not possible to compress all the content of the Internet in a database and let a computational model ask questions of the database. However, the tool may have been trained on large amounts of content found on the Internet.

The AI tool has been fed with these texts so that the computational model will receive more data to adjust how computations should be processed, and at best become more reliable, at least statistically. In the case of a language model, it is these calculations that select words so that the tool can imitate texts written by a human. Being "trained" with large amounts of information makes the computational model theoretically more adept at imitating human conversation and human writing.

The term Large Language Model (LLM) reflects, among other things, that the amount of information these models are trained on is just that: very large. The idea is that the more information is used, the "better" the predictions the language model will make when it has to determine which answer, or output from the model, matches the question, or the input.

Similarly, we can see that tools that generate art can do so in a way that becomes more believable to humans the more human artworks the computational model is trained on.

Speech recognition is an area where language models are clearly useful. Different people pronounce some words or concepts differently, and background noise can drown them out. A language model can then help determine the probability that certain words are being said, based on all the other words being said, because it has been trained on a lot of information. In this case, the language model's purpose is to faithfully reproduce what the human says as text. The person's intent is very clear, and it is possible to immediately verify whether the result matches both code and expectations.
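Here is a minimal sketch of how a language model can help in speech recognition. It assumes a hypothetical recognizer that has already produced candidate transcriptions, each with an acoustic probability. A bigram model with add-one (Laplace) smoothing, trained on a hypothetical handful of sentences, scores how plausible each word sequence is. The candidate with the best combined log score is chosen. Real systems use far larger models, and all names, scores and texts here are illustrative assumptions.

```python
import math
from collections import Counter, defaultdict


def train_bigrams(texts):
    """Collect bigram counts, predecessor counts and the vocabulary."""
    bigrams = defaultdict(Counter)
    predecessors = Counter()
    vocab = set()
    for text in texts:
        words = text.lower().split()
        vocab.update(words)
        for a, b in zip(words, words[1:]):
            bigrams[a][b] += 1
            predecessors[a] += 1
    return bigrams, predecessors, vocab


def lm_log_prob(model, sentence):
    """Log-probability of a word sequence under add-one smoothed bigrams."""
    bigrams, predecessors, vocab = model
    words = sentence.lower().split()
    v = len(vocab)
    total = 0.0
    for a, b in zip(words, words[1:]):
        pair_count = bigrams.get(a, Counter())[b]  # 0 if never seen together
        total += math.log((pair_count + 1) / (predecessors[a] + v))
    return total


def pick_transcription(model, candidates):
    """Return the candidate with the best acoustic + language-model log score."""
    return max(
        candidates,
        key=lambda text: math.log(candidates[text]) + lm_log_prob(model, text),
    )


# Hypothetical training texts and recognizer output. Background noise makes
# "night" slightly more likely acoustically than "light".
model = train_bigrams([
    "please turn on the light",
    "turn the light off",
    "the light is on",
])
candidates = {"turn on the light": 0.4, "turn on the night": 0.5}

print(pick_transcription(model, candidates))  # → turn on the light
```

The acoustic scores alone would pick "night". The language model pulls the result toward the word sequence people actually use. This works well here because the intent (transcribe exactly what was said) is clear, and the result can be checked immediately.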

It is different with language models where the goal is conversation, or where the goal is not very clearly defined. Then all sorts of problems can arise.

The following factors do not always imply errors, but they may be relevant depending on the purpose of a computational model. Once you understand the enormous amount of information a model is trained on, you also realize that the people who build it cannot possibly read all that content themselves and assess its relevance. Training on such large amounts of unlabeled text is often described in the industry as unsupervised (or self-supervised) learning.

- Bias. The texts that are used may contain an enormous amount of prejudice of various kinds, including extreme opinions and incitement against ethnic groups. It is not only about overt racism but also more subtle inferences, for example that men deserve more pay than women. In fact, given the sheer amount of content that AI tools sift through, these skewed views can be picked up from material that many people would at first glance consider neutral.

Because a model needs a lot of material to become "better", a great deal of historical material is always used, and this material naturally contains biases. Societal values change over time, but old material stays locked in the values of the era that produced it.

- Errors. Of course, the training material also contains clear factual errors. But it is important to remember that this is not the main reason language models so often give wrong answers. They give wrong answers because they do not aim to generate facts; they aim to imitate human language. Still, just as a flat world map can distort our understanding of what our planet looks like, inaccuracies repeated as truths for decades can end up appearing in statistical calculations.

For example, European writing has long attributed art forms originating in what is now Nigeria to Greek origins. Here, prejudice leads to assumptions, which in turn lead to fallacies expressed as truth. Many such patterns, such as a historical reluctance to name African artists, can leave a language model unable to find statistically reliable ways to describe art and other culture of African origin. Far more texts identify Yoruba metal sculptures as Greek, although understanding has improved in recent years and the correct origin is now given. So, purely statistically, if you count the texts asserting the false perception, the sculptures are Greek.

- Constraints. We know that the entire Internet is not used as an information source. Constraints have to be in place. Someone selects sources of information. Someone chooses a language. Someone chooses time periods for the source material, because it has to start and end somewhere. And all these constraints, of course, create their own biases, because you can never claim that the source (especially in large models) is either representative or neutral.

Today there are more than 7,000 languages and dialects in the world. Only about 7% of these are reflected in online material. About 98% of web pages on the Internet are published in only 12 languages, and more than half of all web pages are in English.

About 76% of internet users live in Africa, Asia, the Middle East, Latin America and the Caribbean – but the majority of content comes from elsewhere. On Wikipedia, for example, more than 80% of the content comes from Europe and North America.

Weaknesses in the information store can lead to the erasure of entire regions, cultures and languages from being represented in probable answers.

When using AI tools, one must pay attention to their weaknesses and how old values can be reinforced by them.

The response is presented

After the calculation is completed, the answer is presented to the operator. It is important to remember that here, too, the manufacturer makes many important decisions. There is not just one way to present answers but, of course, an infinite number of ways. It is important to try to find out the manufacturer's intent behind the chosen method.

- Computed response. Are we getting any clues as to why the output looks the way it does and how the query itself affected the output? For those who want to understand not only the answer, but also the reason for the answer, there is an opportunity here to give information about how well the answer fits the statistical model, or ask for more information to supplement and improve. It's unfortunately rare that we see this form of transparency and support. Of course, this also reminds us that we are dealing with a machine, and if the manufacturer wants to hide that fact they also want to hide everything that reminds the operator of this. Humans and AI are often talked about as entities that should work together for the best results, and there are design decisions to be made here that can invite that collaboration. What parts of the computation are relevant for the operator to understand and know about?

- Code. Again, the answer, or output, must be presented in a way that is easy to absorb and that also matches the input and the purpose of the tool. At best, we can verify that the output is reasonable and relevant given the input. The weakness is that an exceptionally well-formulated answer (human impersonation) can itself make the operator less likely to question its validity. If there are also no visual signals about how the answer was calculated, there is even less incentive to question it. How can the code be adapted to make the operator's interpretation relevant and useful?

- Expression. Just as humans write in a particular manner, the output can match the operator's manner. Here, the manufacturer can choose to match the input, follow given instructions or adopt an entirely distinct tone of its own. Microsoft's latest AI tool was criticized for adding a lot of emoji to its responses. That expression was, of course, the manufacturer's decision, and it is a good example of how much expression shapes the way a response is received. What level of self-expression is appropriate given the tool's scope of use?

Managing the response

The operator who now receives the answer must judge its relevance and credibility for themselves.

- Decoding. Of course, being able to read the answer and understand all its components requires that the answer reveal all of this. Remember: decoding is not only about being able to read and understand the text of a given answer. It is also about forming an idea of how the answer provides value in relation to the question, or whether it falls short of expectations altogether. There is a balance to strike between simplifying and offering everything. What support can be offered when the operator decodes, to help them better understand what is doubtful and what is definite in the output?

- Experience. What one feels and perceives as an effect of how the answer looks and is presented also plays a role in trust and satisfaction. When the decoded answer exceeds expectations in its expression, or leads to new thoughts and ideas, trust and satisfaction can increase even when the answer does not correspond directly to the intent behind the question. Do we understand the totality of the experience well enough to also understand why the operator reacts and acts the way they do?

- Regulation. Depending on how satisfied or dissatisfied the operator is with the answer, there may be reasons to invite different ways to proceed, such as staying with the tool but regulating the completed interaction to steer towards a different answer. In the model, adjust, expand and undo are examples of how the operator proceeds by regulating the whole question, or parts of it. Some tools also encourage feedback by asking whether you are satisfied or dissatisfied with the answer. This may indicate a feedback mechanism that is used in some way to influence the computational model. In what ways can the operator help themselves, others or the tool achieve a higher degree of usefulness based on an answer already given?

Action and consequences

The action now involves taking the answer and using it for an application in some completely different context. We therefore have to think a step further, outside the communication model.

- How is the answer used to create benefits or value?

- How can we better contribute to a format, and integrations, that make the answer useful in the next stage of application?

- Who owns the answer?

- How can the answer help?

- How can the answer hurt?

The questions asked here will need to be worked out in research and interviews, but also with goal-oriented foresight. We must dare to ask the difficult questions and imagine both the desired and undesired future scenarios in order to be able to contribute to appropriate measures that, for example, minimize risks and make them visible when necessary.

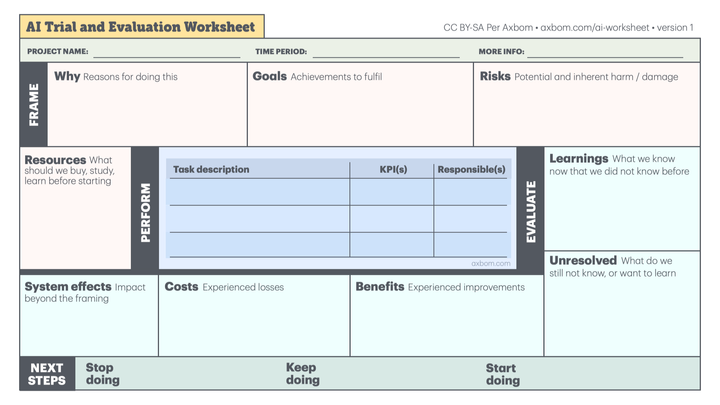

To be able to do this in a good way, we must have a clear intent and intended use for AI-based tools in our operations.

If we invest in using and integrating AI tools in a business and no one takes responsibility for the effect on people, or for defining and evaluating the benefit, then we have given up before we have started. That's when the strategy becomes to wait until something goes wrong and extinguish fires under duress, without preventive work or planned management.

This communication model is available as your support when you want to actively work to achieve well-thought-out, sustainable and compassionate solutions within the space of chatbots and AI-powered question-and-response tools.
