Apple Child Safety Harms

How Apple is moving into mass surveillance. A walkthrough of all the risks.

Apple has announced new functionality coming to their devices this autumn. The announcement has stirred up debate and damnation in the privacy community, and disappointment among Apple users who have previously celebrated Apple's attention to privacy. Meanwhile, the purpose of these functions carries a moral imperative that can easily derail arguments and concerns about the long-term human rights impact of these changes.

This is my overview of what is happening, attempting to address a lot of the confusion that is in no small part due to the way that Apple have chosen to announce this.

Note that, as of now, these functions have been confirmed to apply to the United States. When and where further roll-out will happen has not been communicated.

Three new functions

There are no fewer than three things being released at the same time, and it's important to keep them separate in discussions, because they all work differently and have different purposes.

I will outline them here and dive deeper into the negative impact further on in the article. You will see the abbreviation CSAM throughout, which stands for Child Sexual Abuse Material.

1) Nudity alerts in Messages

This is functionality that can be turned on (opt-in) when people use the Family features in iOS, iPadOS and macOS. Parents can turn this on for their children, which means the following: when a child 12 years old or younger is about to send a nude photo, or receives a photo containing nudity, an alert is triggered on their phone. What counts as nudity is determined by Apple's proprietary algorithms.

When sending, the alert asks if the child really wants to send the photo, letting them know that if they do, their parents will be informed. When receiving, an image identified as containing nudity will be automatically blurred. If the child tries to deblur it, they are warned again that a parent will be informed. For children older than 12, the alert asks them if they really want to send/deblur, but does not alert guardians.

This function will be activated for Messages, not only iMessage, which means it will also trigger for MMS messages sent from devices other than Apple's own. This also means that the detection happens on the person's own device, using "on-device machine learning". According to their FAQ (PDF), this means Apple themselves do not access the photo.

2) Voice and search assistance related to child abuse

When people ask assistants for information related to CSAM, the assistants will now provide clearer guidance on the harmfulness of this type of material and where to turn for help.

Siri and Search are also being updated to intervene when users perform searches for queries related to CSAM. These interventions will explain to users that interest in this topic is harmful and problematic, and provide resources from partners to get help with this issue.

3) On-device CSAM detection

For anyone using iCloud (the default, friction-free way Apple offers to back up and share your photos and sync them across devices), a detection program will be activated on iOS (iPhones) and iPadOS (iPads). This is the action taken by Apple that has triggered the bulk of the pushback from security experts across the world.

The detection program is designed to create a so-called perceptual hash for each of the photos in your photo library. In its simplest form, this is a fixed-size code made up of letters and numbers that represents that photo. A simple example of a hash can look like this (Apple's hashes will look different and are produced by a far more complex process):

3db9184f5da4e463832b086211af8d2314919951

At the same time, a database of perceptual hashes of known CSAM images will be installed on all devices running the detection program (in the U.S. for now). The database is provided by NCMEC (National Center for Missing and Exploited Children). The detection program will compare all the hashes generated from your photo library with all the hashes in that database. The idea is that two photos with the same hash, or with a high enough similarity rating (we don't know how high this has to be), are taken to show the same content.
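To make the idea of hash matching concrete, here is a minimal sketch in Python. It is not Apple's algorithm: it uses a classic toy perceptual hash called average hash (aHash), applied to an 8x8 greyscale image, and measures similarity as the Hamming distance, the number of bits in which two hashes differ. The function names, the image and the `max_distance` cutoff are all assumptions for illustration.

```python
from typing import List

# A greyscale image as rows of pixel values (0-255). Real systems first
# shrink a photo to a small fixed size; here we assume an 8x8 input.
Image = List[List[int]]


def average_hash(img: Image) -> int:
    """Toy perceptual hash (aHash): one bit per pixel, set to 1 when the
    pixel is brighter than the mean brightness of the whole image."""
    pixels = [p for row in img for p in row]
    mean = sum(pixels) / len(pixels)
    bits = 0
    for p in pixels:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming_distance(a: int, b: int) -> int:
    """Number of bit positions in which two hashes differ."""
    return bin(a ^ b).count("1")


def matches_database(h: int, database: List[int], max_distance: int) -> bool:
    """True if the hash is within max_distance bits of any database hash."""
    return any(hamming_distance(h, d) <= max_distance for d in database)


# A left-to-right gradient, and a brightened copy of the same picture.
photo = [[x * 32 for x in range(8)] for _ in range(8)]
brighter = [[min(p + 10, 255) for p in row] for row in photo]

database = [average_hash(photo)]
print(hex(average_hash(photo)))  # 0xf0f0f0f0f0f0f0f
print(matches_database(average_hash(brighter), database, max_distance=5))  # True
```

The brightened copy is a different file, but it produces the same hash, so it counts as a match.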

Before Apple's algorithm decides to alert Apple staff, a match has to happen more than once. They call this a threshold. It could mean, for example, that there have to be 10 matches, or X matches with a high enough similarity rating, before Apple takes any action. What the actual threshold is, we do not know.

Please note that this is still a very simplified explanation of how the matching works, and that Apple describes additional layers of security that prevent photos from being exposed to Apple before the confidence level is high enough to warrant manual review.
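The threshold idea can be sketched the same way. This is a hypothetical illustration, not Apple's implementation: hashes are plain integers, similarity is Hamming distance with an assumed `max_distance`, and the default threshold of 10 is the same made-up example used above, since the real value is not public. The extra cryptographic layers Apple describes are left out entirely.

```python
from typing import Iterable, List


def hamming_distance(a: int, b: int) -> int:
    """Number of bit positions in which two hashes differ."""
    return bin(a ^ b).count("1")


def count_matches(photo_hashes: Iterable[int], database: List[int],
                  max_distance: int) -> int:
    """How many photos in the library are close enough to some database hash."""
    return sum(
        1
        for h in photo_hashes
        if any(hamming_distance(h, d) <= max_distance for d in database)
    )


def should_escalate(photo_hashes: Iterable[int], database: List[int],
                    max_distance: int = 0, threshold: int = 10) -> bool:
    """Hypothetical rule: escalate to human review only once the number of
    matching photos reaches the threshold. A single match does nothing."""
    return count_matches(photo_hashes, database, max_distance) >= threshold


database = [0b1011_0000, 0b0000_1111]
# 3 near-identical photos that sit 1 bit away from a database hash,
# plus 50 unrelated photos.
library = [0b1011_0001] * 3 + [0b0101_0101] * 50

print(count_matches(library, database, max_distance=1))                  # 3
print(should_escalate(library, database, max_distance=1, threshold=10))  # False
print(should_escalate(library, database, max_distance=1, threshold=3))   # True
```

Note that three near-identical photos count as three separate matches here.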

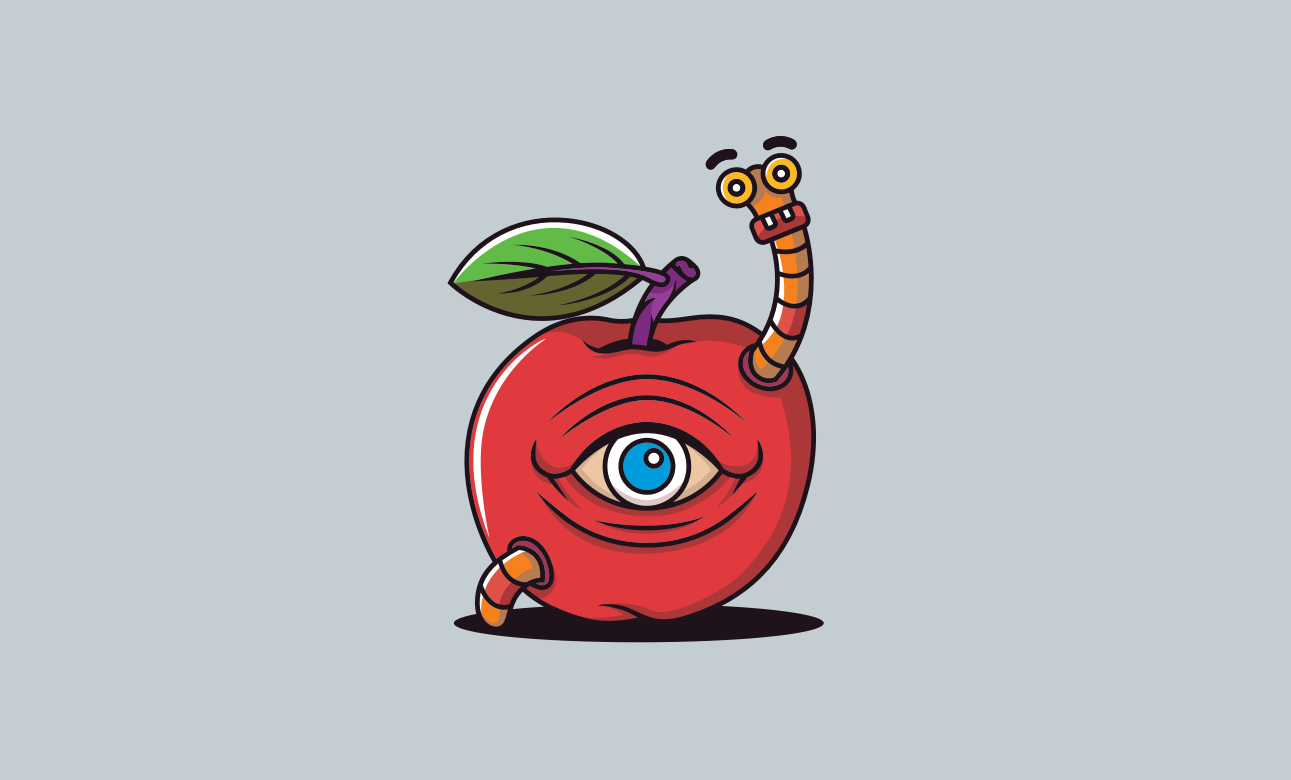

I have addressed two common misconceptions here. The first myth is that this CSAM detection is machine-learning based and will find any CSAM content. No, it will only match against the database of hashes. The common example people give is taking a picture of your child in the bath: a photo like that would not be flagged. The other myth is that actual photos are being compared, when in fact only hashes are being compared. However, how the surveillance is carried out does not change the fact that it is surveillance.

But, as we will see, it is easy to get caught up in how a mass surveillance system is technically implemented, and lose sight of the bigger question of whether it should be implemented at all.

One very important aspect of the hashing being discussed is that this is perceptual hashing, not cryptographic hashing. A cryptographic hash changes completely if a single bit of the input changes; perceptual hashes are designed to find matches between similar images, not to be unique. If you want to dive straight into more technical detail on perceptual hashing, see this summary, this tutorial and then read Sarah Jamie Lewis' Twitter thread.
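The difference between the two kinds of hashing is easy to demonstrate. In this minimal Python sketch, SHA-256 from the standard library stands in for a cryptographic hash and a toy average hash (aHash) stands in for a perceptual hash; neither is what Apple uses. Nudging a single pixel by one brightness level changes the SHA-256 digest completely, while the perceptual hash stays exactly the same.

```python
import hashlib
from typing import List

Image = List[List[int]]  # 8x8 greyscale pixels, values 0-255


def sha256_hex(img: Image) -> str:
    """Cryptographic hash of the raw pixel bytes."""
    return hashlib.sha256(bytes(p for row in img for p in row)).hexdigest()


def average_hash(img: Image) -> int:
    """Toy perceptual hash: one bit per pixel, set if brighter than the mean."""
    pixels = [p for row in img for p in row]
    mean = sum(pixels) / len(pixels)
    bits = 0
    for p in pixels:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


original = [[x * 32 for x in range(8)] for _ in range(8)]
tweaked = [row[:] for row in original]
tweaked[0][0] += 1  # change one pixel by one brightness level

print(sha256_hex(original) == sha256_hex(tweaked))      # False: digest changes completely
print(average_hash(original) == average_hash(tweaked))  # True: perceptual hash unchanged
```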

Purpose

Every change is made for a purpose. The questions to ask are:

- What is the purpose of this change? (intent)

- Will the change achieve the desired purpose? (chance of success)

- How will we know if the change achieves the desired purpose? (goal/measure)

- What potential further positive or negative impact can this change lead to? (strategic foresight and second-order thinking)

- Are the benefits of the change greater than the potential harm? (ethical/risk assessment)

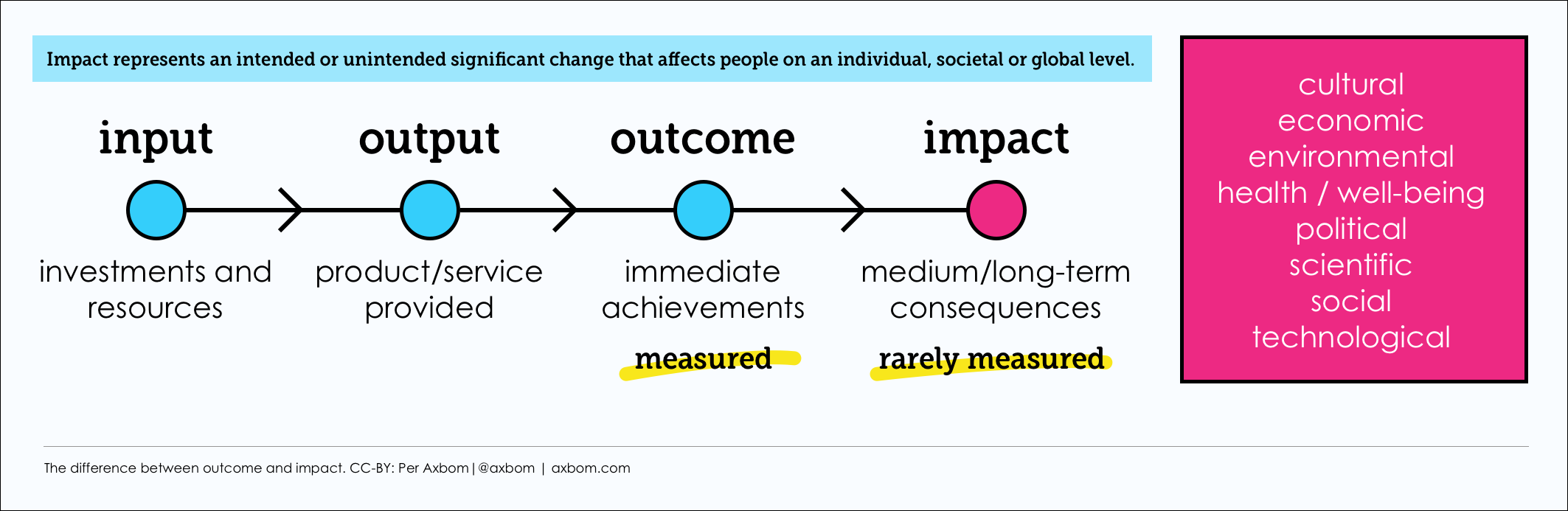

When I teach design ethics I differentiate between outcome and impact. Outcome refers to the immediate achievements of a new implementation. When looking at outcome, projects tend to measure immediate effects like:

- Did we build what we said we would build?

- Are we within budget?

- Are the performance indicators (downloads, conversions, visits) showing the expected improvement?

- Is the customer happy?

Impact is concerned with the medium and long-term human, societal and environmental consequences of a new thing. Example questions are:

- Are we improving universal wellbeing?

- Are we maintaining human autonomy and control over decision-making?

- Who outside our intended target group is further affected by this change and how?

- What societal changes may occur with widespread use of this service?

- Are we removing any freedoms?

All these questions are relevant, but Apple provides very little insight into the expected outcome or impact of their changes. This is their line:

We want to help protect children from predators who use communication tools to recruit and exploit them, and limit the spread of Child Sexual Abuse Material (CSAM).

To what extent these changes are expected to support this outcome is not clear. One obvious risk is that any such content on Apple devices simply moves to other platforms, with insignificant change to real child protection. The described outcome is elusive. But I'm getting ahead of myself.

The reason I bring these questions to your attention is to help you understand that a solution that has the best of intent can still lead to severe negative impact. And even if the outcome appears successful, the impact can be dire.

If you are curious about digital ethics, the Tarot Cards of Tech is a good place to start. The questions posed there help you become better at exploring the future and the impact of your decisions.

The Harms of Apple's decisions

An ethical way of working demands that companies apply a high level of attention to human and universal wellbeing in the development process, and do so transparently. Two things should be documented:

- The process (methods, meetings and measures) of how the organisation ensures an ethical way of working.

- The output that is necessarily the result of this process. These can be risk/impact assessments, strategic foresight reports and mitigation plans. For large companies this will also be transparency reports outlining law enforcement requests, data leaks and supply chain concerns.

When it comes to Apple's announced changes, there is very little of this type of material to peruse. It's hard to feel safe with a decision that is communicated as if there are no risks. There are always risks and negative impact, especially with companies this large and international. Given the potential impact of these changes, this lack of transparency is a glaring problem. The other problem is the obscurity created by unnecessarily complex technical descriptions. By making this about how the changes are implemented, the discussion on whether or not it should be done at all is sidelined.

In the words of EFF:

Apple can explain at length how its technical implementation will preserve privacy and security in its proposed backdoor, but at the end of the day, even a thoroughly documented, carefully thought-out, and narrowly-scoped backdoor is still a backdoor.

The risks outlined below need to be discussed in much more detail. The media have to truly understand them and put pressure on Apple to reveal more about their intent, what success looks like, and how these serious risks and harms will be avoided. Because if they can't do that, the road we're heading down looks ever more dismal.

Risk: Abusive Families

On-device detection of nudity in photos is described as a help for parents and guardians. At the top of the list of where abuse happens is of course "within families". There are several concerns here:

- Using the feature as an abusive game, forcing confirmation that photos have been received.

- Forcing a partner, child or non-family member to be registered as a child aged 12 or younger in the Family Plan even when they are not, thus applying the surveillance far beyond its intended use and instead empowering abusers.

- Inadvertent disclosure of sexual preferences or gender identity that can lead to serious physical abuse or worse.

There is one safety measure applied here: the child will be alerted that their action sends a message to the guardian. But there is no guarantee that the guardian doesn't already have access to the phone through its passcode. An important detail is that, once a photo has been flagged as containing nudity, the child cannot delete it. This means that the guardian, or abuser, can always retrieve it from the child's phone.

This is an example of a freedom being removed based on an Apple algorithm detecting nudity in a photo. It removes the ability to delete a photo from the person's own phone.

Risk: False positives

When it comes to the CSAM database on your phone, the perceptual hash is designed so that it triggers a match even if a photo has been cropped, rotated or changed to greyscale. This tolerance is a feature of perceptual hashing that enables finding photos even when people try to avoid detection through image manipulation. It also means the system is vulnerable to "collisions": something that is not CSAM content can still trigger a match, producing a false positive.
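The flip side of that tolerance can be shown with a toy perceptual hash, average hash (aHash), used here only as a stand-in for Apple's own function. Because aHash only records which pixels are brighter than the image's average, a smooth gradient and a hard two-tone image, which look nothing alike, produce exactly the same hash. Both test images are made up for illustration.

```python
from typing import List

Image = List[List[int]]  # 8x8 greyscale pixels, values 0-255


def average_hash(img: Image) -> int:
    """Toy perceptual hash: one bit per pixel, set if brighter than the mean."""
    pixels = [p for row in img for p in row]
    mean = sum(pixels) / len(pixels)
    bits = 0
    for p in pixels:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming_distance(a: int, b: int) -> int:
    """Number of bit positions in which two hashes differ."""
    return bin(a ^ b).count("1")


# A smooth left-to-right gradient...
gradient = [[x * 32 for x in range(8)] for _ in range(8)]
# ...and a flat two-tone image: dark left half, light right half.
two_tone = [[50] * 4 + [200] * 4 for _ in range(8)]

print(gradient == two_tone)                                              # False
print(hamming_distance(average_hash(gradient), average_hash(two_tone)))  # 0
```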

Apple say two things about this:

- There is a "one in a trillion" chance per year that false positives lead to a person's account being incorrectly flagged and their content being looked at

- They have a threshold, so more than one image has to match (how many, we do not know)

Dr. Neal Krawetz who runs FotoForensics has some concerns:

- People these days take many photos of the same scene. So if one photo of a scene is a false positive, the other photos of the same scene may be as well, which could make the threshold moot.

- He calls bullshit on the number "one in a trillion" and explains how Apple could not possibly have had access to enough data to arrive at that number.

- He gives an example of how a photo of a man holding a monkey (no nudity) triggered a false positive for CSAM in his dataset.

The final words in his article are worth quoting here.

I understand the problems related to CSAM, CP, and child exploitation. I've spoken at conferences on this topic. I am a mandatory reporter; I've submitted more reports to NCMEC than Apple, Digital Ocean, Ebay, Grindr, and the Internet Archive. (It isn't that my service receives more of it; it's that we're more vigilant at detecting and reporting it.) I'm no fan of CP. While I would welcome a better solution, I believe that Apple's solution is too invasive and violates both the letter and the intent of the law. If Apple and NCMEC view me as one of the "screeching voices of the minority", then they are not listening.

Risk: Tripping the algorithm to target vulnerable people

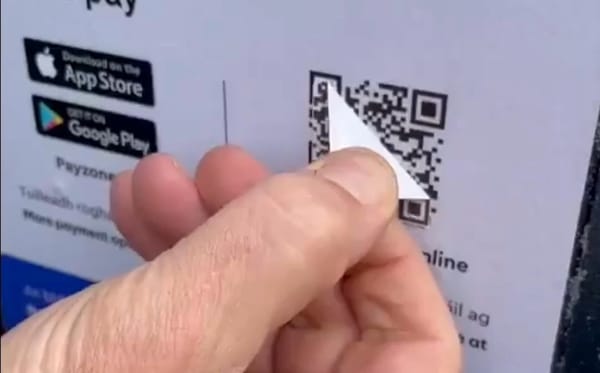

The way perceptual hashing works means that it may also be possible for tech-savvy people to create images that match a hash in the database without being a version of the same image. By flooding such images into another person's iCloud account, an attacker could set off CSAM warnings that trigger investigations. I have previously described one way people push images onto other phones through WhatsApp.

Note the significance of the fact that the hash-matching algorithm is necessarily available on every person's device, since that is where the detection is being executed.
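For a simple perceptual hash, crafting such an image is almost trivial once you have the hash function. The Python sketch below builds an 8x8 image that hits any chosen target hash under a toy average hash (aHash). Apple's own hash function is different, so this shows the principle rather than an attack on Apple's system; `forge_image` and the target value are hypothetical.

```python
from typing import List

Image = List[List[int]]  # 8x8 greyscale pixels, values 0-255


def average_hash(img: Image) -> int:
    """Toy perceptual hash: one bit per pixel, set if brighter than the mean."""
    pixels = [p for row in img for p in row]
    mean = sum(pixels) / len(pixels)
    bits = 0
    for p in pixels:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def forge_image(target: int, dark: int = 50, light: int = 200) -> Image:
    """Build an 8x8 image whose aHash equals `target`: pixels whose bit is 1
    are made light, the rest dark. (An all-ones target can never be produced
    by aHash, since not every pixel can be brighter than the mean.)"""
    img = []
    for r in range(8):
        row = []
        for c in range(8):
            # Pixel (r, c) maps to bit 63 - (r*8 + c), matching average_hash.
            bit = (target >> (63 - (r * 8 + c))) & 1
            row.append(light if bit else dark)
        img.append(row)
    return img


target = 0x123456789ABCDEF0  # stands in for a hash taken from a database
forged = forge_image(target)
print(average_hash(forged) == target)  # True
```

The forged image is just a pattern of light and dark squares, yet the hash cannot tell it apart from whatever image originally produced the target.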

Risk: Database expansions to other material (mission creep)

The technical solution that is implemented does not, and cannot, object to what is in the database. While this is being announced as a CSAM database, there is nothing to stop authorities from asking Apple to add more data to the same database, or even switch the database to something else. This could relate to anything from copyright searches to criminal investigations, or to locating government critics or dissidents. Or people whose sexual preference is still illegal in 70 countries. A database expansion could be activated universally or on a per-country basis at the click of a button, with no consent from the owners of the phones running the search.

Let me be perfectly clear:

A private company in a western democracy is deploying mass surveillance without a warrant, on privately owned phones. While their first application is CSAM, their only safeguard against expanding that scope is... their word.

That word is about as good as when Steve Jobs promised that Apple would never develop a stylus. We really need to remind ourselves how easy it is for private companies to change their mind.

Apple have built the system that privacy professionals and activists have been fearing for decades. The safeguard against abuse of such a system cannot simply be: "Oh, we would never do that". Because if that is the only protection, the question is not if the system will be abused, only when.

The people smacking their lips and enjoying this moment the most are of course authoritarian leaders in totalitarian states. They know how to use this tool that Apple has built for them. That tool is from their playbook. It's one of the most perfect tools for oppression and persecution of people they don't happen to like and want to put in prison... or kill.

Do people not know how much Apple is already compromising with the Chinese government? Of course not. Coming out of the Donald J. Trump presidency you'd imagine even Apple had become aware of how vulnerable a democracy can be. But perhaps they have already complied with a new world order. From the NY Times article:

Internal Apple documents reviewed by The New York Times, interviews with 17 current and former Apple employees and four security experts, and new filings made in a court case in the United States last week provide rare insight into the compromises Mr. Cook has made to do business in China. They offer an extensive inside look — many aspects of which have never been reported before — at how Apple has given in to escalating demands from the Chinese authorities.

Now I don't think I have to tell you that this mission creep is the most worrisome and terrifying risk of all, and the one that has privacy advocates scrambling to object. And this is why we should worry less about the technical specifics of the implementation and more about the implications for human rights in general.

Risk: Setting a precedent for mass surveillance

If Apple moves forward with this, many more companies will follow suit and mass surveillance without warrants, using personal property and deployed by private companies, will become the norm.

There is no way to later on say "Oops, we made a mistake" and walk back this development.

Risk: Prevalence of CSAM databases

While the underlying motivation of child safety can never be argued against, the dangers of how that outcome is pursued should be highlighted. There is reason to take steps even further back and question how CSAM databases themselves are collected and protected. Ashley Lake, in a Twitter thread, argues against the existence of CSAM databases and points to the lack of oversight over who assembles them and how, who has access to them, and what happens if, or rather when, they are leaked. As it stands, a database of actual child pornography is owned and operated by a private non-profit corporation (NCMEC). All the while, it would appear that the children abused in the photos, and their parents, have not given explicit consent to be used in that database.

With this move, Apple have now greenlighted and cemented the existence of these databases and provided an incentive for them to grow. The implementation as it stands clashes with EU law, for example, but calls to change that law will not be far away.

Note: In the EU, the abbreviation CSEM is used. This stands for Child Sexual Exploitation Material.

Risk: Minor effects on child safety, universal impact on privacy and abuse

We don't know yet what effect Apple's moves will have on child safety. Europol have identified the key threat in the area of child sexual exploitation as peer-to-peer (P2P) networks and anonymised access like Darknet networks (e.g. Tor). "These computer environments remain the main platform to access child abuse material and the principal means for non-commercial distribution." These spaces are not being addressed here.

Meanwhile, we see many potential avenues for misuse of the tool that Apple intends to put in place, and for abuse of human rights.

A small step for Apple. A giant creep for humankind.

Risk: A string of weak links

Here are a few further sources of harm to keep in mind:

- NCMEC's database is not an infallible, audited and 100% trustworthy source of CSAM imagery. Its content comes from thousands of different actors within the private sector, individuals and police.

- Bad actors at NCMEC can add new non-CSAM hashes without Apple's knowledge.

- Authorities can force non-CSAM hashes to be included without disclosing this to the public.

- False positives. We do not know yet how many there will be or how they will affect people's lives.

- Naive assumptions that Apple's system has no bugs and that there is no way for a malicious actor inside or outside Apple to exploit the system. With the recent Pegasus spyware hack fresh in mind, it's surprising how little the media have latched on to how vulnerable iPhones themselves are.

- Naive trust that Apple won't bow to external pressure to search additional types of content, or expand their Messages surveillance to scan not just children’s but anyone’s accounts. As I've explained above, Apple are already accommodating the specific wishes of authoritarian states.

Why Apple really are doing this

Since you've stayed with me this far, I want to highlight the motivation behind this that most experts seem to agree on. This is Apple's way of being able to deploy end-to-end encryption in their messaging services and still appease law enforcement.

But in fact, as EFF have stated again and again, client-side scanning breaks the promises of end-to-end encryption.

For now this matters less, because the risks I have outlined should matter more.

Why on-device scanning matters

Apple of course are far from alone in scanning content for CSAM. The main difference is that Facebook et al are scanning content that people consciously upload to Facebook. Apple are now intending to scan it on the very device that you yourself own. Or at least have the appearance of owning. You can absolutely compare Facebook-scanning with iCloud-scanning, but not with iOS-scanning.

People will say: just turn off iCloud if you do not want to participate. That is somewhat disingenuous, because a huge number of people do not know how to turn off that default setting on their phone, nor are they aware of the universal risks. The other point is that, given this loophole, we have to again wonder how effective this move is when it comes to actually saving children from getting hurt, and how proportional the positive effect of this move is compared to the negative impact. If this is an experiment, it's a tremendously devastating one when it comes to human safety.

The other point is this: There really is no immediate way of stopping Apple, or a bad actor with illegal access, from triggering a future scan of your phone content without iCloud being enabled. Because again, this is still all happening right on your privately-owned phone and you do not have the freedom to remove their detection software from your phone.

Next steps

People will also say: just switch phones then. As if Google's Android were a haven for privacy. A broader dilemma is of course that governments and banks have pushed us ever closer to a solid dependency on either Android or iOS to conduct our everyday lives. Their responsibility for where this push eventually leads should also be cause for debate.

But not right now.

For now, take the time to digest what is happening. Read the source material I'm providing. Make up your mind. And if it makes sense to you, sign the Apple Privacy Letter. If you are a journalist, you know what to do.

We can never minimize the pain and suffering of sexually exploited children. We can reason around paths forward that do a better job of protection than current solutions, while also not bringing devastating harm to other vulnerable and oppressed persons, and without treating all people as potential criminals by establishing persistent algorithmic surveillance of everyone without a warrant.

Notable quotes from others

It's clear that Apple didn't consult any civil society orgs. No civil liberties or human rights input. Privacy, freedom of expression, LGBTQI+ issues, orgs for homeless queer youth, none of it. If they had, they'd be touting that (even if they ignored everything the orgs said).

Bottom line: Apple's deployment must not happen. Everyone should raise their voice against it. What is at stake is all the privacy and freedom improvements gained in the last decades. Let's not lose them now, in the name of privacy.

Clearly a rubicon moment for privacy and end-to-end encryption. I worry if Apple faces anything other than existential annihilation for proposing continual surveillance of private messages then it won't be long before other providers feel the pressure to do the same.

Regardless of what Apple’s long term plans are, they’ve sent a very clear signal. In their (very influential) opinion, it is safe to build systems that scan users’ phones for prohibited content. That’s the message they’re sending to governments, competing services, China, you.

Whether they turn out to be right or wrong on that point hardly matters. This will break the dam — governments will demand it from everyone. And by the time we find out it was a mistake, it will be way too late.

Reminder: Apple sells iPhones without FaceTime in Saudi Arabia, because local regulation prohibits encrypted phone calls. That's just one example of many where Apple's bent to local pressure. What happens when local regulation mandates that messages be scanned for homosexuality?

References

These links and more are also available in my Twitter thread.