Faux-tonomy - the dangers of fake autonomy

The word autonomy is being thrown around these days, often to imply that software is running without human intervention. But it still does not mean software can make decisions outside of the constraints of its own programming.

Software cannot learn what it was not programmed to learn. Importantly, it cannot arrive at the conclusion “no, I do not want to do this anymore”, which perhaps should be considered a core part of autonomy.

In The Elements of Digital Ethics I referred to autonomous, changing algorithms within the topic of invisible decision-making.

The more complex the algorithms become, the harder they are to understand. The more autonomously they are allowed to progress, the further they deviate from human understanding.

I was called out on this and am on board with the criticism. Our continued use of the word autonomous is misleading and could itself contribute to harm. First, it underpins the illusion of thinking machines, something we should remind ourselves we are not close to achieving. Second, it provides makers with an excuse to avoid accountability.

If we contribute to perpetuating the idea of autonomous machines with free will, we contribute to misleading lawmakers and society at large. More people will believe makers are faultless when the actions of software harm humans, and the duty of enforcing accountability will weaken.

Going forward, I will work on shifting vocabulary. For example, I believe faux-tonomy (with an added explanation, of course) can bring attention to the deceptive nature of autonomy. When talking about learning I will try to emphasise simulated learning. When talking about behaviour I will strive to underscore that it is illusory.

I’m sure you will notice I have not addressed the phrase artificial intelligence (AI). This is itself an ever-changing concept, and it is used carelessly by creators, media and lawmakers alike. We do best when we manage to avoid it altogether, or when we describe very clearly what we mean by it.

The point I make about invisible decision-making still stands, as it is really about the lack of control over algorithms. This was clearly exemplified by a recent leak revealing that Facebook engineers do not even know how, or where, data travels within Facebook.

“We do not have an adequate level of control and explainability over how our systems use data,” Facebook engineers say in leaked document.

I do, however, want to find a way to phrase my explanation in The Elements of Digital Ethics differently.

Your thoughts on this are appreciated.

Update: I have now shifted the focus of that passage in The Elements of Digital Ethics to emphasise the human factor. The two sentences quoted at the beginning of this post now read like this:

The more complex the algorithms become, the harder they are to understand. As more people are involved, time passes, integrations with other systems are made and documentation is faulty, the further they deviate from human understanding.

Can algorithms progress?

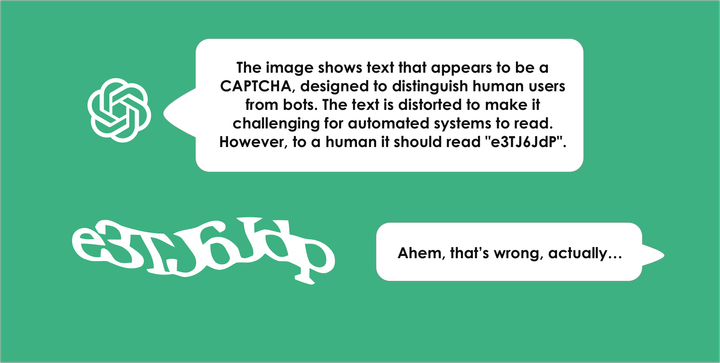

My critic, @Shamar, finally offered an excellent explanation of why we cannot talk about progress when it comes to algorithms that have been launched and portrayed as intelligent, and of how much about ethics and accountability becomes clear if we think in terms of statistical programming rather than AI:

Progress has a political (and positive) connotation.

There is plenty of sample where such sort of bots' statistical programming included racist and sexist slur.

Do you remember #Microsoft Tay?

Did it "progress"?

The programmers (self appointed as "data scientist") didn't select each input tweet by themselves but selected the data source (#Twitter) and designed how such data were turned into vectors, how their dimensionality was reduced and so forth...

The exact same software, computing the exact same output for any given input, could be programmed by collecting the datasource before hand.

So why releasing a pre-alpha software and using "users" to provide its (data)source over time to statistically program it should clear "data scientist" responsibility?

Why it should allow company to waive their legal accountability?

If you talk (and think) in term of statistical programming instead of #ArtificialIntelligence, a lot of grey areas suddenly becomes crystal clear, several ethical concerns becomes trivial and all accountability issues simply disappear.

So, no: there is no "progress" in a software programmed statistically over time, just irresponsible companies shipping unfinished software and math-washing their own accountability over the externality such software produce for the whole society.
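The equivalence claimed in the quote (the same software, computing the same output, whether the data arrives from users over time or is collected beforehand) can be illustrated with a toy sketch. It assumes a deliberately trivial "statistical program", a word-pair counter whose result does not depend on the order of its input. All names here (`update`, `train_batch`, `predict`) are hypothetical and are not taken from any real chatbot.

```python
from collections import Counter, defaultdict


def bigrams(message):
    """Split a message into consecutive lowercase word pairs."""
    words = message.lower().split()
    return list(zip(words, words[1:]))


def new_model():
    """An empty model: for each word, a count of the words that followed it."""
    return defaultdict(Counter)


def update(model, message):
    """'Learning' from one live message: just add to the counts."""
    for current, following in bigrams(message):
        model[current][following] += 1
    return model


def train_batch(messages):
    """Build the model in one go from a dataset collected beforehand."""
    model = new_model()
    all_pairs = [pair for message in messages for pair in bigrams(message)]
    for (current, following), count in Counter(all_pairs).items():
        model[current][following] = count
    return model


def predict(model, word):
    """Return the most frequent follower of a word (ties broken alphabetically)."""
    followers = model.get(word.lower())
    if not followers:
        return None
    return min(followers, key=lambda w: (-followers[w], w))


# Hypothetical data source chosen by the programmers.
messages = [
    "the bot repeats what it reads",
    "the bot reads what users write",
    "users write what they like",
]

# Variant 1: shipped empty and statistically programmed by "users" over time.
live_model = new_model()
for message in messages:
    update(live_model, message)

# Variant 2: the same data collected first, then used to build the model.
batch_model = train_batch(messages)

print(live_model == batch_model)
print(predict(live_model, "the"), predict(batch_model, "the"))
```

In this sketch the two models are identical, so neither variant "progressed" beyond what the chosen data source and counting rule determined. Training methods that depend on input order would need the data replayed in the same order to reproduce the same result, but the choice of source and method still rests with the people who built the software.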
