UX book authors through the ChatGPT looking-glass

Alice asked the CheshireChat, which was sitting in a PC in a tree, “What road do I take?” The Chat asked, “Where do you want to go?” “I don’t know,” Alice answered. “Then,” said the Chat, “it really doesn’t matter, does it?” As we engage with our curious chats, it seems entirely apt to observe that too few are asking themselves where they want to go. And hence even fewer ask themselves if the Chat is the best guide when asking for directions.

"It would be so nice if something made sense for a change."

I so dearly wanted to start my article with this well-known quote from the book Alice's Adventures in Wonderland by Lewis Carroll. It's on all the lists of memorable quotes from this classic nonsense literature, often alongside Carroll's name.

There is just one problem: it doesn't appear in the book. And Carroll did not write it. And where it does appear, that is not quite the correct wording.

In my due-diligence efforts to find the context of the quote, it was soon evident that I had crawled down a much deeper rabbit hole than I could have anticipated. I would then go on to convince myself that this is the closest I've come to going down a literal rabbit hole. I hope that makes non-sense to you.

Quotes are devious. They often become misattributed. And sooner or later there is a tipping point wherein the misattribution becomes akin to truth, in the sense that it is largely accepted, repeated, written down and amplified again and again.

As a metaphor for some of the more peculiar effects of a ChatGPT-enabled world, I could not have asked for a more fitting rabbit hole to fall down.

At the very end I will reveal the source of the misattributed quote, but before going way down there let's choose the road of misattributed books.

The point of this article is to help people understand how little trust one should put into tools like this in general. They can be fun, but also very harmful. It's good to have a think about who is benefitting and who is being harmed. I will be the first to admit they are great for speech recognition and to an extent also programming.

The many real authors of other people's UX books

I've noticed the considerable trouble ChatGPT has with attributions and references. They are often completely false or non-existent. And I do often wonder how often those falsehoods later make their way into published content and become "new truths". Due diligence when checking sources is somewhat of a lost art form.

And as I have demonstrated with that Carroll quote, if enough people quote the wrong source that does indeed become the new truth.

So I went down a path of looking for UX books and who ChatGPT may attribute them to. My first prompt was about Jared Spool, whom I had just recently seen post something clever on LinkedIn, as he does. A clever observation may trigger interest in reading more from Jared Spool, so one might wonder what books he has written.

- Web Site Usability: A Designer's Guide - This book, published in 1999, was one of the first books to focus on the importance of web usability and user-centered design.

- Web Anatomy: Interaction Design Frameworks That Work - This book, published in 2002, provides a comprehensive framework for designing effective and engaging web interfaces.

- User Interface Engineering - This book, published in 2003, collects a number of essays and articles written by Spool on various aspects of user interface design.

- Web Dogma '06: The Gospel According to Usability - This book, published in 2006, provides a collection of design principles and guidelines that can help designers create usable and effective web interfaces.

- Web App Success: A Practical Guide - This book, published in 2010, focuses on the strategies and techniques that are necessary to build successful web applications.

- The Anatomy of a Design Decision - This book, published in 2020, explores the decision-making processes that go into designing effective user experiences, and provides guidance on how to make better design decisions.

In fact, I happen to know that Jared has co-authored two books and contributed the foreword to some more. Those two books are covered (the first two in the list), albeit none of his co-authors are mentioned.

- User Interface Engineering is the original name of his company, not a book.

- Web Dogma '06 appears to be a manifesto by Eric Reiss.

- There is a Practical Guide to Web App Success, but it's written by Dan Zambonini.

- The Anatomy of a Design Decision is a talk Jared did at Webstock back in 2012. Close, but no cigar.

Only 33% of that well-articulated response by ChatGPT is correct. Something to keep in mind.

Upon seeing how easy it was for the tool to list book titles, I'm thinking the name "Jared Spool" has obviously appeared in the vicinity of many book names, which is generally the primary way this tool makes sense of the world. So surely it can make up some more for me.

- Designing Web Interfaces: Principles and Patterns for Rich Interactions - This book, published in 2009, provides guidance on how to design effective and engaging web interfaces using principles and patterns.

- UX Strategy: How to Devise Innovative Digital Products that People Want - This book, published in 2015, focuses on the strategic aspects of user experience design, and provides guidance on how to create products that meet user needs and business goals.

- User Interface Engineering - This book, published in 2003, collects a number of essays and articles written by Spool on various aspects of user interface design.

- Integrating UX: Designing Seamless Experiences for Workflows and Productivity - This book, published in 2019, provides guidance on how to integrate user experience design into the software development process, and create seamless experiences for users.

- Enterprise UX: Enterprise Design Best Practices for Great Experiences - This book, published in 2021, focuses on the unique challenges of designing user experiences for enterprise software and provides best practices and strategies for designing effective enterprise UX.

Nope. This time around, none of these are books by Jared Spool (and some are not books at all), and I can't find that he has contributed to them either.

- Designing Web Interfaces is by Bill Scott and Theresa Neil.

- UX Strategy is by Jaime Levy.

- Again, User Interface Engineering was the original name of his company (isn't ChatGPT just great at remembering previous answers!).

- The last two books on that list I can't seem to find a record of. Likely they are completely made up.

Response accuracy: 0%

But of course I'm just doing research so I'm thinking big names in UX should be writing books together. I think of a name that will obviously have many entries in ChatGPT's training data and probe about the possibility of Jared having written a book with them. In this case Don Norman.

An inexcusably harmful thing about ChatGPT is that it will not only generate random facts, it will do so with language that is brimming with confidence, and it always doubles down with extra content that makes it easier for anyone clueless about the real state of things to wash down the lies. If there is just a random piece of information that seems harmless and believable, the complete quackery is eloquently disguised.

Response accuracy: 0%.

So I pick another name from the hat, UX legend Kim Goodwin. Surely the bot will have seen those names in close vicinity of each other as well.

And after that extremely poor track record of response accuracy thus far I can hear the people in the back shouting "See!! You can't fool ChatGPT!"

Hang on. So the tool responds to the actual question but does its thing and can't help adorning its response with some nonsense. Sure, Kim wrote Designing for the Digital Age. But that other book, Mapping Experiences, that's written by Jim Kalbach.

And you just wait, my friends. You just wait. Let's get the tool into the mood of books co-authored by Jared Spool and Kim Goodwin.

Not sure how this is useful or why we would want to go down this road unless I want to be a huge pain and bombard the both of them with suggestions of books they should write together. But I can't help thinking that in a world where chatbots are built to reinforce each other we may start getting these suggestions as real responses of books that have been written. Because we all know the inevitability of these generated texts being used to train the next versions of mindless tools.

Anyway, I'm a firm believer in the tool's efforts to please, and to always generate content in this endeavor. After all, that's its singular purpose: to generate content. So I'm assuming it can think of some more books that Kim Goodwin has written.

Since this is the first I'm hearing about Designing for the Digital Age being co-authored with anyone I naturally have to wonder who those co-authors supposedly are.

- Alan Cooper - A pioneer in the field of interaction design and the author of "The Inmates are Running the Asylum".

- Robert Reimann - A UX designer and author of "About Face 3: The Essentials of Interaction Design".

- Dave Cronin - A UX designer and educator who has worked for companies such as Philips, Motorola, and GE Healthcare.

- Christopher Noessel - A UX designer and author of "Make It So: Interaction Design Lessons from Science Fiction".

There we go. Four great men attributed as co-authors of Kim's book. Well, to be fair Alan Cooper did write the foreword.

Response accuracy: 0% (Nope, the author of a foreword, however good it is, is not regarded as a co-author.)

I'm kind of wondering by now how the tool would help anyone who is doubtful about its accuracy gain some understanding of how off-base it often is in its responses. How does the tool itself set expectations, if you will?

In general, I would say my confidence level in my responses is around 8 out of 10. I am constantly learning and improving, but there may be times when I do not have the information required to provide a fully accurate or complete answer. If you ever have any doubts or concerns about the accuracy of my responses, please feel free to ask for clarification or seek additional information.

So I'm thinking: a tool that is clueless about the accuracy of its responses is of course clueless about most of its responses. You have to wonder where this figure comes from. Has OpenAI purposely fed something like this into the computational model, or is it picking these numbers essentially out of a randomized probability machine as well?

This part is just wonderful: "there may be times when I do not have the information required to provide a fully accurate or complete answer". Because not having the information required never stops the tool from generating content. It never stops the tool from going way past the information that makes sense, and off into nonsense.

On the other hand, the tool is actually just saying the "confidence level" is high. Like with any mansplainer the confidence level can be excruciatingly high even when the accuracy of a response is zero.

But there is another alarming aspect when it comes to ChatGPT. The apologies. It words apologies as if it actually has some form of awareness. Itself a dangerous trait to design into a chatbot. I sometimes get the impression that the tool strives more to apologise than to show any encoded concern for accuracy. As such the tool provides the illusion that it is learning from you.

So I ask again if Jared Spool and Kim Goodwin wrote a book together.

In this book, Spool and Goodwin provide practical guidance on how to create a successful UX strategy, including conducting research, building prototypes, and testing with users. They draw on their extensive experience in the field to provide real-world examples and case studies that demonstrate how UX strategy can lead to better business outcomes.

I apologize for any confusion my earlier responses may have caused, and I hope this information is helpful.

I cringe at myself for using the words "think" and "remember" in my prompt. But there we go. Taking the skepticism in my prompts to heart, the tool's computational model calculates a probability of these names and this book being relevant within the word jumble of this artificial conversation.

And it manages to erase Jaime Levy, the actual author of this book. And read that again: "After reviewing my sources...". Humbug. Please understand that this tool does not review sources.

Response accuracy: 0%.

It's at this point I re-read parts of the output and notice something I myself had missed. Earlier, ChatGPT's response asserts that The Design of Everyday Things was co-authored with "other UX experts". Who might those be?

- Andrea Resmini - A UX architect and professor of information architecture and design at Jönköping University in Sweden.

- James Kalbach - A UX researcher and information architect who has worked for companies such as eBay, Audi, and LexisNexis.

- Aaron Irizarry - A UX designer and director of user experience at Nasdaq.

- Dan Saffer - A UX designer and author of several books on design and innovation.

Response accuracy: 0%.

Fantastic list of people attributed as authors of a work they had no part in. Unsurprisingly it turns out that the comprehensive and "up-to-date" view of the world that ChatGPT brings is one littered with the equivalent of deception, bias, wild guesses and nonsense. But knowing how these tools work, these are of course the types of responses that should be expected.

If you don't know what road to take going forward with these tools, a road will certainly be chosen for you. Using a tool like this for any type of work involving a concern for accuracy, or a wish to be anchored in reality, can obviously backfire in a big way.

We can only speculate as to what happens when this very article is indexed by the machines. Will errors be reinforced by the errors repeated on this page? At least I call out the errors, but there is no real way of knowing what a language model will make of it.

Please don't use the word 'hallucinating'

You may have seen people in the industry brushing this off as "hallucinations".

But there are a couple of problems with giving the impression that these are like hiccups in the machine.

- Hallucinating is something that living beings do. It attributes a sense of awareness to the tool that is uncalled for. The tool is also not lying. It's actually just generating text exactly as it is supposed to, and should be expected to be doing. It is following its programming. Being a perfectly fine language model and bot. Just with extremely misleading marketing labels.

- Generating content that differs from fact isn't a bug, it's a feature. The tools aren't built to fetch content from trusted sources. Hallucination implies that the tool is off-course. But a tool like ChatGPT is built to generate text that matches a high probability of looking like human-written text. There is no promise of adherence to something that is true. For many use-cases one might have better luck than I had today, but for many use-cases things will be worse. How will you know which it is unless you already know the facts yourself?

- Instead of taking responsibility for the tools that they themselves built, manufacturers like Google, Bing and OpenAI can claim, by using the word hallucination, that the tools are doing something that is outside their control as makers. From one perspective that may be the case, but why should these companies be allowed to release tools into the world that they themselves cannot demonstrate any control over?

To underscore that last point, imagine an AI-assisted car that, every day you drive it, swerves and brakes at irregular intervals. The manufacturers, hoping to avoid accountability, describe this as "hallucinations" and they really can't say what's causing it. Imagine everyone shrugging and saying... "well, that's fine then".

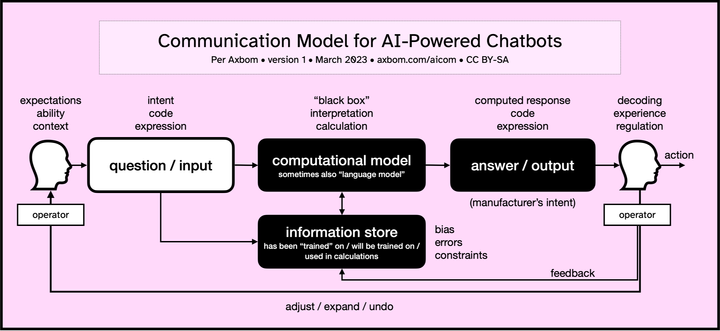

Rather than hallucinating, the language model tools are actually doing what they should be expected to be doing because it's what they were built for: generating randomized content using a probability engine to calculate the likely validity of the words and sentences. Validity here means that it resembles how people write. It's not the resemblance of truth that is judged within the computational model.
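To make that point a little more tangible, here is a deliberately tiny Python sketch. It is not how ChatGPT is built; it is a toy under loudly stated assumptions. The counts are invented for illustration, and the names `NEARBY_TITLE_COUNTS`, `title_probabilities` and `generate_titles` are hypothetical. All it shows is that a generator which picks titles by how often they appeared near a name has no slot anywhere for "is this true?".

```python
import random

# Hypothetical toy "training data": how often each title appeared near the
# author's name. The counts are invented purely for illustration.
NEARBY_TITLE_COUNTS = {
    "Web Site Usability": 5,        # a book he actually co-authored
    "UX Strategy": 3,               # written by someone else
    "Designing Web Interfaces": 2,  # written by someone else
}


def title_probabilities(counts):
    """Turn co-occurrence counts into a probability distribution."""
    total = sum(counts.values())
    return {title: n / total for title, n in counts.items()}


def generate_titles(counts, k, seed=0):
    """Sample k titles, weighted only by how often they appeared nearby.

    Nothing in here checks authorship: a frequent neighbour is simply
    a likely output.
    """
    rng = random.Random(seed)  # seeded so repeated runs give the same picks
    titles = list(counts)
    weights = [counts[title] for title in titles]
    return rng.choices(titles, weights=weights, k=k)


probs = title_probabilities(NEARBY_TITLE_COUNTS)
samples = generate_titles(NEARBY_TITLE_COUNTS, k=5)

for title, p in probs.items():
    print(f"{p:.0%} chance of generating: {title}")
print("Generated list:", samples)
```

With these made-up counts, half of the probability mass sits on titles the person never wrote. The toy is working exactly as designed when it produces them.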

Naomi Klein says it better in "AI machines aren’t ‘hallucinating’. But their makers are".

Moral of the story

“You’re thinking about something, my dear, and that makes you forget to talk. I can’t tell you just now what the moral of that is, but I shall remember it in a bit.”

“Perhaps it hasn’t one,” Alice ventured to remark.

“Tut, tut, child!” said the Duchess. “Everything’s got a moral, if only you can find it.” And she squeezed herself up closer to Alice’s side as she spoke.

Allow me to leave you with these three topics of consideration in your pursuit of tools that assist you on your travels down AI-infested roads. Hopefully well-chosen roads that align with where you want to go.

1) Who or what is being amplified?

The people who are more present than others in the training data will naturally surface in generated text more often than others. This of course also makes it more interesting to reference people that generate more intriguing results. Amplification happens simply through making use of generated text, and again when publishing those generated texts. And it doesn't only apply to people but of course also to ideas, biases, prejudice and misattributed quotes.

2) Who or what is being erased?

Erasure happens already at the point of manufacturing a tool, wherein a limited dataset is used, however large it may be. Not everything is found in digital form, in a specific language. And some things that have later been proven false can still be expressed as truths in masses of content.

But erasure also happens during use, in which a tool performs better with some people and ideas than others, and when the amplified people, for example, become attributed as authors of books they were never involved in. Erasure also happens by way of who has regular access to the tools and who does not.

3) How do we recognise and manage a self-reinforcing loop?

To become better the tools need more data, some will claim. Yet we are now at a stage where more and more of that data is generated by the tools themselves. Is there even more data to even anything out, or to presume an improvement is possible? Not everyone thinks so. At what point do we deem that a self-reinforcing loop is a problem, how do we recognise it and what is our plan for managing it?

The questions these tools raise are serious and should be of great concern for many who work in the space of ideas and invention and progress. One of my fears has long been that rather than contribute to new thinking and innovation, the tools stop humanity in its tracks, gluing us to a mindset shaped by the pride and prejudice they inevitably both mirror and enforce.

There are certainly more things to be concerned about and I run workshops to help organisations approach these modern beasts with a little more caution and guidance.

Disney's role in literature

The misattribution of that Alice quote I mention at the start wasn't too far from the attributed source. Christopher Kelly found it for me in a script from the 1951 Disney movie Alice in Wonderland. And I extracted a clip of that scene, just for you. The line, worded slightly differently than it is reproduced in most quote compilations online, appears towards the end.

It's the most convincing kind of non-truth. A remix of the original book writer's work after his passing. His name on the package and an obvious homage to his creation. Disney certainly have a way of finding an audience for stories that are loosely based on the original, and making those stories the new points of reference for accuracy of the storyline and content.

For avid readers of Alice's Adventures in Wonderland this scene takes place between chapters 7 and 8 of the book. In Chapter 7: A Mad Tea-Party, she leaves the tea party through a door in a tree, exclaiming “It’s the stupidest tea-party I ever was at in all my life!” She then enters the hallway, nibbles on a mushroom to make herself smaller and walks down to the garden that reveals itself to be the scene of Chapter 8: The Queen's Croquet-Ground. The quite long transition scene between these two chapters in the Disney film (longer than my clip), with a sad Alice lost in the woods, does not appear in the book, and neither do most of the odd animals and creatures from that scene.

It's almost like I can hear a thousand protectors of the CheshireChat calling out: "Hey, it may not be the truth but it certainly could have been!". An argument that could have been straight from the book itself.

Alice's Adventures in Wonderland has long been one of my favorite books. But I certainly did not expect to be so clearly living inside of it.

Bonus

Did I ask ChatGPT where the quote is from? Why yes, yes I did. After a few attempts of not finding the quote in the book Alice's Adventures in Wonderland, the bot goes on to claim that it's from The Hitchhiker's Guide to the Galaxy. After not being able to quote a passage from there either, it finally generates a fake passage from the book. I'm not even joking. You can read the full transcript here:

AI ethicist Dr. Casey Fiesler tried the same thing and for her ChatGPT generated a completely fake passage from Carroll's book.
