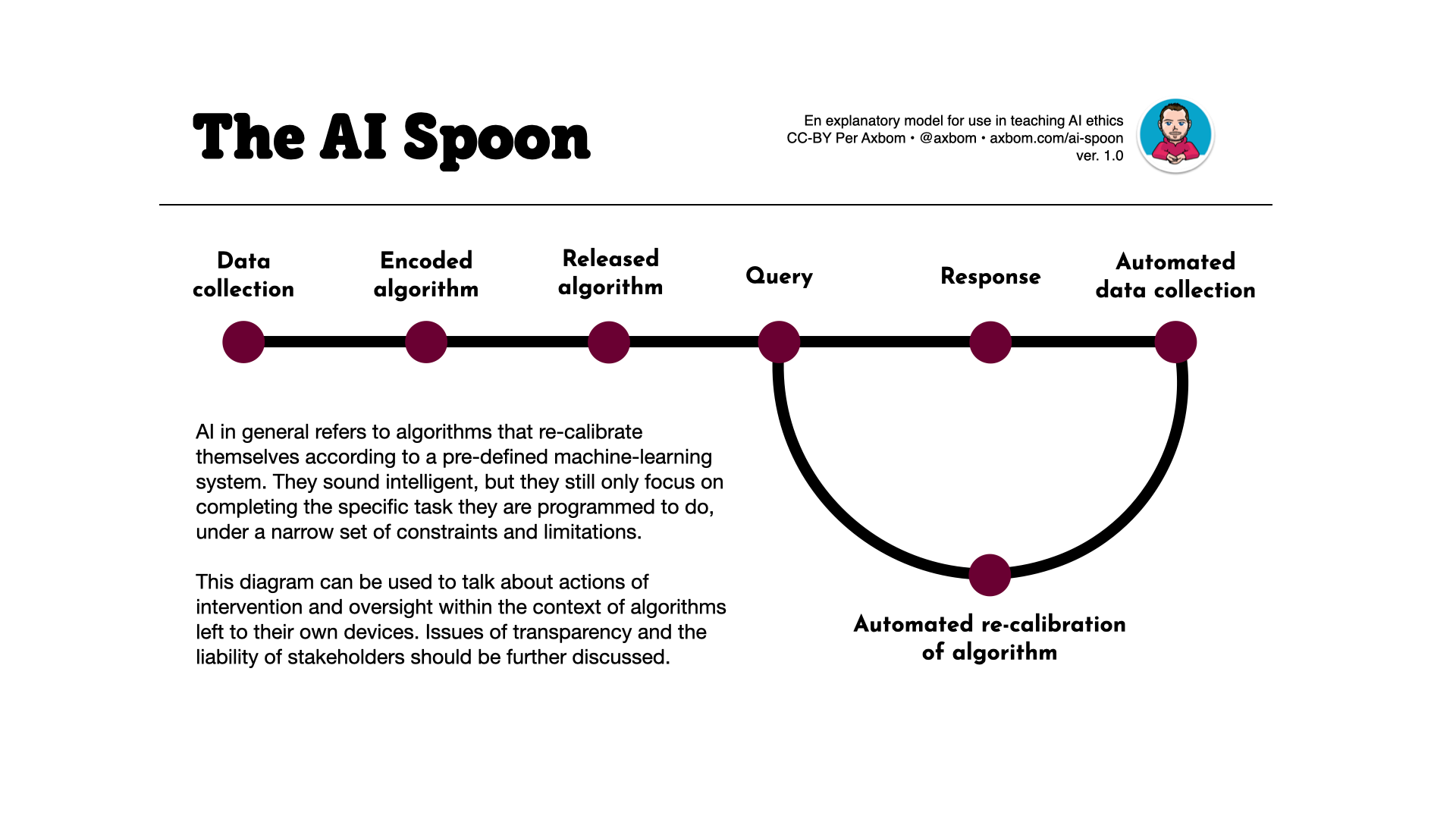

The AI we know and use today most commonly refers to algorithms that re-calibrate themselves according to a pre-defined machine-learning system. They may seem intelligent, but they still focus only on completing the specific task they are programmed to do, under a narrow set of constraints and limitations.

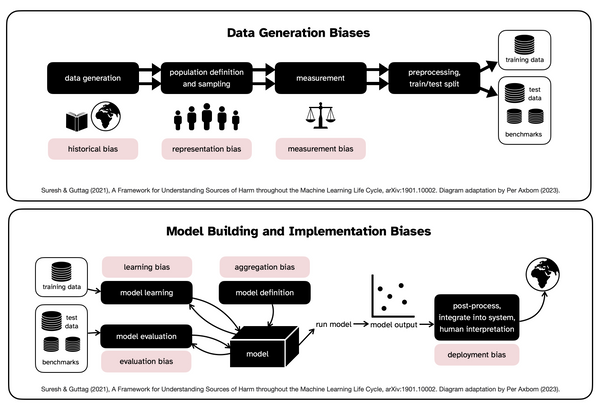

This diagram can be used to discuss intervention and oversight when algorithms are left to their own devices. Issues of transparency and the liability of stakeholders deserve further discussion.

The AI spoon is an explanatory model that came to mind as I was thinking about the difference between algorithms and the type of AI that we come across in the world today, known as narrow AI. The tool takes its name from its shape: a long line (the handle of the spoon) that continues into a bowl shape.

The steps in the handle of the spoon are "data collection", "encoded algorithm" and "released algorithm". These describe how an algorithm is built from some body of data and then released into the world.

As the line continues into the bowl shape, the first two steps are "Query" and "Response". These refer to the purpose of an algorithm: it takes an action (the response) when something triggers it (the query).
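To make the handle and the first two bowl steps concrete, here is a minimal sketch in Python. It assumes a toy "algorithm" that estimates commute times by averaging past observations; the commute scenario, the sample data and the `encode_algorithm` helper are all hypothetical illustrations, not part of the model itself. Note that this released algorithm never changes: the same query always gets the same response.

```python
from statistics import mean
from typing import Callable

# "Data collection": hypothetical sample data (observed commute times, minutes)
collected_data = [12.0, 15.0, 11.0, 14.0]


def encode_algorithm(data: list[float]) -> Callable[[str], float]:
    """'Encoded algorithm': a fixed rule derived once from the collected data."""
    estimate = mean(data)  # computed at build time and never updated

    def algorithm(query: str) -> float:
        # "Query" -> "Response": the trigger produces an action (an answer)
        return estimate

    return algorithm


# "Released algorithm": the frozen rule now answers queries in the world
released_algorithm = encode_algorithm(collected_data)
print(released_algorithm("How long is my commute?"))  # 13.0
```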

The bowl then continues with "Automated data collection" and "Automated re-calibration of algorithm" before looping back to "Query". These steps explain what generally makes the algorithm an AI: it can re-calibrate, without human intervention, to give a new response to the same query.
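The full loop of the spoon can be sketched in a few lines of Python. This is a toy illustration under stated assumptions: the `SpoonModel` class, the commute-time scenario and the averaging rule are hypothetical, chosen only to show how automated data collection and re-calibration let the same query receive a new response without anyone editing the algorithm.

```python
from statistics import mean


class SpoonModel:
    """Hypothetical sketch of the 'AI spoon': an algorithm that re-calibrates itself."""

    def __init__(self, initial_data: list[float]) -> None:
        # Handle: "data collection" -> "encoded algorithm" -> "released algorithm"
        self.data = list(initial_data)
        self.estimate = mean(self.data)

    def respond(self, query: str) -> float:
        # Bowl: "Query" -> "Response"
        return self.estimate

    def collect(self, observation: float) -> None:
        # Bowl: "Automated data collection" (no human reviews this input)
        self.data.append(observation)

    def recalibrate(self) -> None:
        # Bowl: "Automated re-calibration of algorithm"
        self.estimate = mean(self.data)


model = SpoonModel([12.0, 15.0, 11.0, 14.0])
query = "How long is my commute?"
first = model.respond(query)
model.collect(23.0)   # a new observation arrives automatically
model.recalibrate()   # the rule updates itself
second = model.respond(query)  # back to "Query": same question, new answer
print(first, second)  # 13.0 15.0
```

The point of the sketch is the last two lines: nothing outside the loop changed, yet the response moved from 13.0 to 15.0, which is exactly where questions of oversight and liability arise.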

Let me know how you use it and if you think I should make further clarifications.
