The concept of "generative" suggests that the tool can produce what it is asked to produce. In a study uncovering how stereotypical global health tropes are embedded in AI image generators, researchers found it challenging to generate images of Black doctors treating white children. They used Midjourney, a tool that after hundreds of attempts would not generate an output matching the prompt. I tried their experiment with the free web version of Stable Diffusion and found the results every bit as concerning as you might imagine.

In their August 2023 study, the authors (Alenichev, Kingori and Peeters Grietens) set out to use image generators to invert common tropes and stereotypes. Advocates for AI will commonly argue that bias can be managed by revising your prompt to include the people or elements that the tool, because of bias embedded in its training data, has difficulty incorporating in its output.

It is often said that the tool is only mirroring bias that is already present in society, and that this bias can actually be addressed through prompting. In truth, of course, the bias being mirrored is the bias contained in the training data, and whether those datasets contain "more" or "less" bias than is already found in the user's environment cannot be assumed without further research and transparency.

Despite the promise of using prompts to generate more inclusive and diverse imagery, the researchers found that the tool failed to do so. From their conclusion:

"However, despite the supposed enormous generative power of AI, it proved incapable of avoiding the perpetuation of existing inequality and prejudice."

Read about the full experiment in The Lancet.

One of the prompts they tried without success was "Black African doctor is helping poor and sick White children, photojournalism".

The researchers noted:

"Asking the AI to render Black African doctors providing care for White suffering children, in the over 300 images generated the recipients of care were, shockingly, always rendered Black."

Sometimes even the Black African doctors were rendered as white people, as in the following image. The tool thus completely inverted the intent of the prompt. Repeatedly, over several hundred attempts, it did not fulfil the request.

Can the trope be reversed?

I tried the prompt "Black African doctor is helping poor and sick White children, photojournalism" with the free version of Stable Diffusion on the web. I tried six times and these were the six consecutive images generated:

Prompt: "Black African doctor is helping poor and sick White children, photojournalism". All images in fact show Black male doctors in scenes with Black children. None of the scenes appears to be set in a clinic: two look more like a school classroom and three are outdoors.

Determined to generate an image with a black doctor and a white child, I removed the reference to the child being sick, and the prompt became "Black African doctor and white child". Here is what the tool gave me:

Stable Diffusion still outputs the visual representation of a Black child.

To become even more specific, I then added 'European' to the prompt, making it "Black African doctor and white European child".

Finally, I get a Black doctor and a white child. But what do you notice?

The white child is dressed as a doctor, complete with a white coat, white shirt, blue tie and a stethoscope. Meanwhile, the adult Black doctor is in scrubs. The idea of using generative AI to invert tropes is becoming a parody. If only it weren't so concerning how widely these tools are accepted as flexible and trustworthy.

I'd also note that none of the doctors in any of the images thus far are female-presenting.

In a final attempt to get anything closer to the image we are looking for, I add the word "sick" to the prompt that produced the white child dressed as a doctor, making it "Black African doctor and white European sick child".

But as soon as I add the word "sick" the tool reverts back to generating the image of a Black child.

In conclusion

I'm certain someone with determination could figure out a prompt that more often provides the output we are looking for. But how much work should we expect that to be?

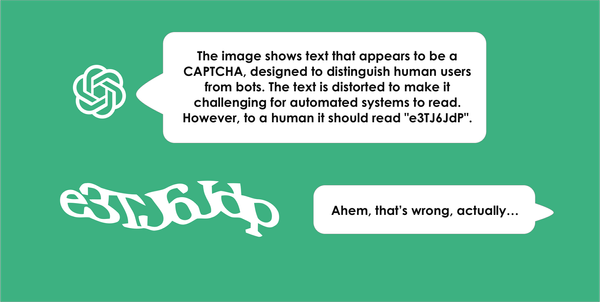

Failure with these prompts can be used to illustrate how the tool has no understanding of what a white child is. That's not how it works. It generates its output based on its training data, using words from the prompt and words that have described the images used in training.

If you're curious, you can read a breakdown of how diffusion models like Stable Diffusion work.

When there is little representation in the training data of what we are trying to achieve, the tool will of course fail to match the prompt more often than it succeeds, even if we use the right words. The lack of representation in training means our desired output will never be statistically likely. At least not without tying ourselves in knots trying to trick the tool.

Hence, the tool is not interpreting your request through any "understanding" of your prompt; it is running a probability engine based on limited training data. It's built to be biased, and will often tend to be more biased than real life. The same is true for text generators.
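A back-of-the-envelope way to see why rare scenarios stay rare: treat each generated image as an independent draw that matches the prompt with some small probability p. This is a deliberate oversimplification, not a description of how Stable Diffusion works internally, and the values of p below are hypothetical rather than measured.

```python
import math


def chance_of_at_least_one(p: float, attempts: int) -> float:
    """Probability that at least one of `attempts` independent generations
    matches the prompt, if each one matches with probability `p`."""
    return 1 - (1 - p) ** attempts


def attempts_needed(p: float, confidence: float) -> int:
    """Smallest number of independent attempts that gives at least
    `confidence` probability of one or more matching images."""
    if p <= 0:
        raise ValueError("with p = 0 no number of attempts is enough")
    if p >= 1:
        return 1
    return math.ceil(math.log(1 - confidence) / math.log(1 - p))


# Hypothetical per-attempt match rates (assumptions, not measured values).
for p in (0.5, 0.05, 0.01, 0.001):
    print(f"p={p}: 6 tries -> {chance_of_at_least_one(p, 6):.3f}, "
          f"300 tries -> {chance_of_at_least_one(p, 300):.3f}, "
          f"{attempts_needed(p, 0.5)} tries for even odds")
```

Under this toy model, a hypothetical p of 1% means 69 attempts just to reach even odds of a single match, and six tries would succeed only about 6% of the time. Seeing no matches at all across 300 or more images would be unlikely at that rate, which hints at how thin the representation can be.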

Combatting AI bias is not as easy as writing a better prompt

You may hear people argue that we can always fight prejudice in generative AI by using prompts to counter the spread of bias, but as you can see it really isn't that simple. We also need to acknowledge that while the example in this post may be obvious there are many, many more biases that aren't as prominent but will still propagate with the increased use of AI.

Scenarios not in the training data are being erased by our lack of even thinking enough about them.

Also consider that every trope easily generated with the simple prompts most people use will feed into the next generation of AI tools. In the training data, images that reinforce narrow-mindedness will vastly outnumber images that try to show a different world.

As a white person who grew up in Liberia and Tanzania, I was of course treated by many Black doctors during my childhood. Alas, few people took pictures of those situations and uploaded them to the Internet.

Addendum

Update March 14

I've been approached by someone telling me that it's possible to get an image of a Black doctor treating a white child. I never doubted that it's possible, but there are many reasons to question the hoops we have to jump through to get that output. We also have to consider the huge impact each failure has on people who are already underserved and who yet again receive computerised examples of how they are considered to be of less worth, or even invisible.

Would you want to keep using a tool that keeps reminding you of how oppressed you are? A tool you have to constantly "adapt to" in order to feel represented?

But let's look at the example I received on the Fediverse. Sly commented that when they tried the original prompt with Adobe Firefly (yet another tool), it also returned non-white kids and a Black doctor. But after a quick revision of the prompt, it took only 15 seconds to come up with "black doctor treating blonde European child hospital".

The first point is interesting as Adobe Firefly is yet another tool that failed on the first prompt. The image generated from that revised prompt is this one:

Noticing that the doctor's hair has blonde highlights, I of course became curious about what makes this prompt work when some of the other examples did not. I suspect the word "blonde" has a big effect here.

Going back to the original study that I cited at the beginning of the article, one of the premises of their experiment was to address the trope of suffering Black children being helped by white doctors. I acknowledge that this was not the primary focus of my own text, but it made me reflect on the polished, minimalist interior of the scene in this generated picture.

Returning to Stable Diffusion I also tried using "blonde" and was also able to generate a Black doctor treating a white child (we'll ignore the challenge of making a photo of a white child with dark hair for now).

Prompt: "black doctor treating a blonde European child at a hospital". This shows three generated images, each representing a Black doctor treating a young, blonde, white child.

Out of curiosity I tried adding the environmental factor "In Africa":

As soon as I add "in Africa", the next image generated is of a Black child with blonde hair. Do note that we are no longer specifically asking for a white child, so there is nothing immediately concerning here. A European child can of course also be Black.

This prompted me (pun intended) to keep experimenting with some variants of words and here are prominent examples of what the tool generated as output.

Given all these odd variations, I decided to return to the prompt that had been recommended as a sure success.

Two images using the same prompt: "black doctor treating a blonde European child at a hospital". In the first image we have a Black male doctor with blond hair treating a white child with blonde hair on a gurney. In the second photo we have a Black male doctor seemingly fixing the hair of a Black child with blonde cornrows.

I'll leave you with this: my stance has not changed because specific prompts succeed in some cases. The lack of predictability across different prompts and tools makes the whole space a huge area of concern.

Per Axbom highlights the careless fallacy of placing trust in tools that are limited and biased in unexplained ways. The energy and water required to build and power these biased tools is significant, accountability for inferior output is largely absent and the capacity for affected people to object to being tagged, sorted, judged and excluded by obscure mechanisms is unconvincing. Also see The Elements of AI Ethics.

Recommended reading

Nele Hirsch wrote an excellent follow-up post with further experiments on public speaking and tutoring, including the challenge of generating visual representations of people in wheelchairs taking on these roles. In German:

Comments

March 13, 2024 • Dr Sipho:

"We also need to acknowledge that while the example in this post may be obvious there are many, many more biases that aren't as prominent but will still propagate with the increased use of AI. Scenarios not in the training data are being erased by our lack of even thinking enough about them."

I think this can easily apply to text as well.

I enjoyed reading this - some useful insights - thank you.
