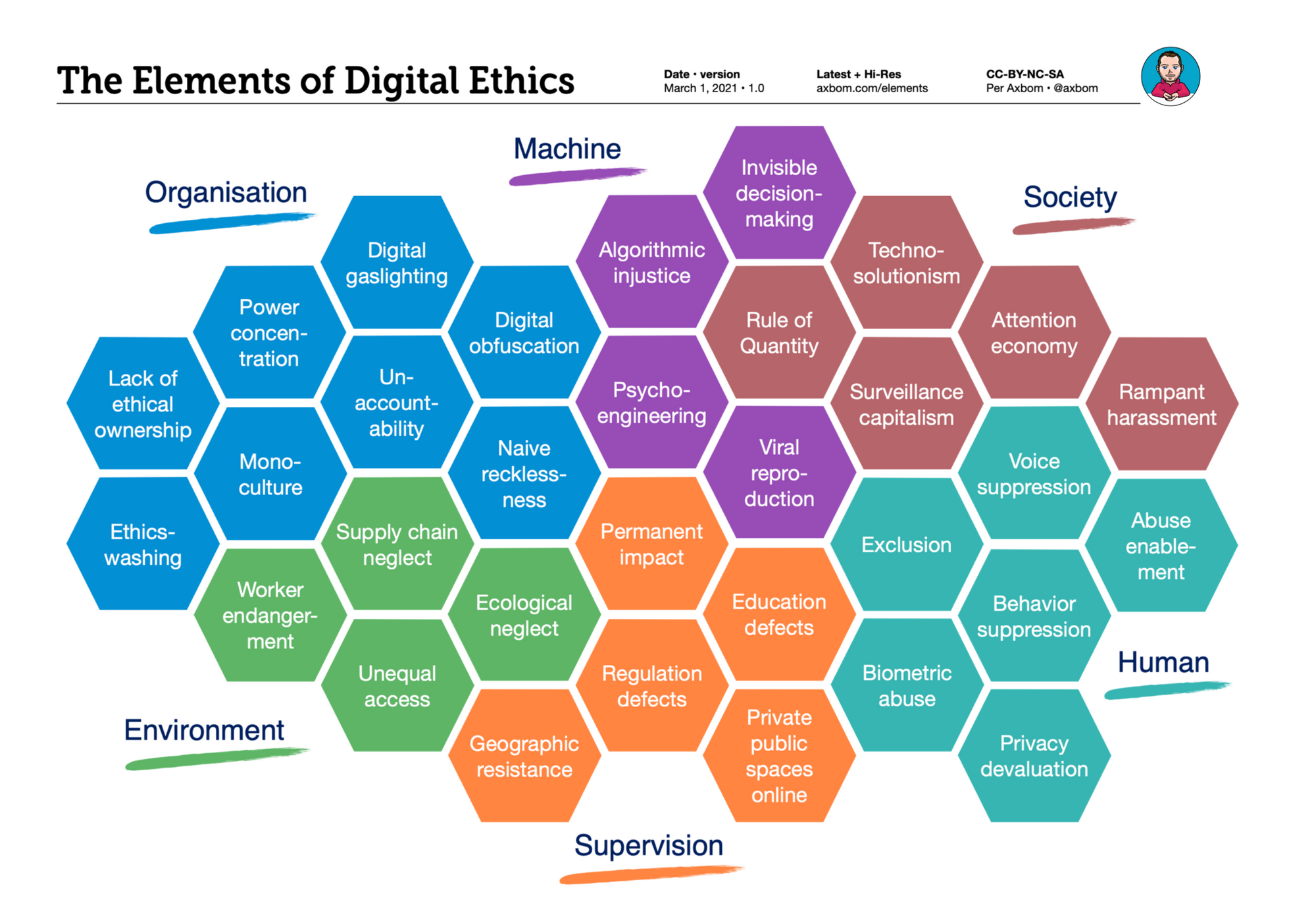

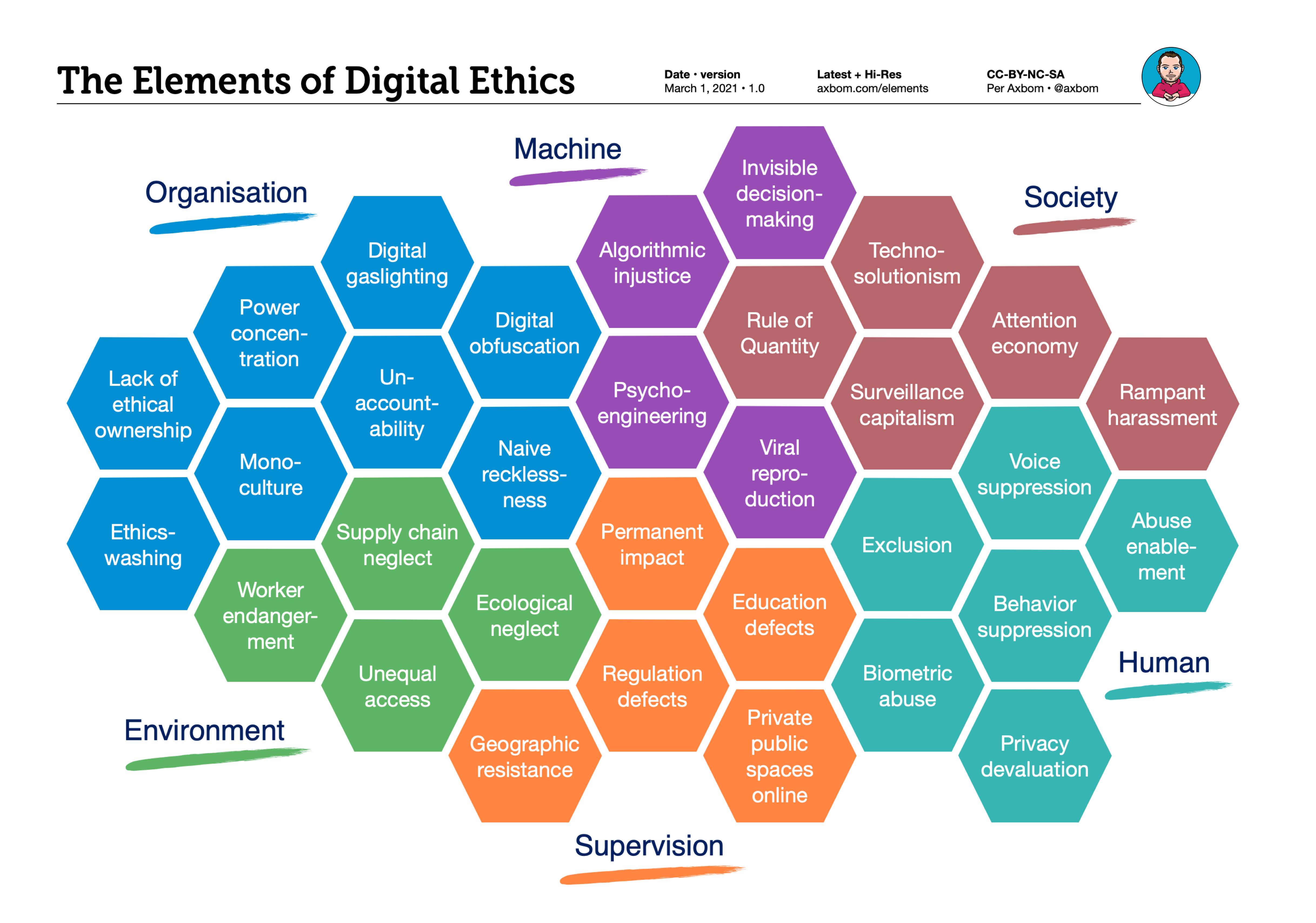

I produced this chart – after months of research, reading and rumination – as a summary of the many ways digital holds potential for negative impact. Alongside the chart I've provided a brief summary of each of its current six sections and 32 elements.

I am not an opponent of digital. I am a proponent of responsible innovation. To make responsible choices you need to understand what could go wrong. The chart is not meant to dissuade but rather to make aware and encourage more careful and considered choices in a digital world. Feel free to use the chart as an everyday tool, as a conversation starter and as an outline for teaching.

Digital ethics refers to a form of applied ethics wherein organisations and professionals work with the intent to eliminate and mitigate harmful outcomes that result from their work with digital products and services. It is a practical application of moral considerations in the tech space. The chart outlines topic areas that contribute to moral dilemmas that are relevant for examination in the practice of digital ethics.

What follows are brief summaries of the six sections and their underlying elements, 32 in total:

Organisation

These elements apply to the qualities and concerns of organisations that develop digital services and products in both the private and public sector.

Lack of Ethical Ownership

When nobody in a company or organisation has the explicit responsibility of ensuring ethical consideration in day-to-day operations, any initiatives will be unstructured and vulnerable to neglect. Lack of ownership makes organisations complicit in activities that lead to negative impact.

Ethicswashing

Ethical codes, advisory boards, whitepapers, awards and more can be assembled to provide an appearance of careful consideration without any real substance behind the gloss. Ethicswashing may also direct attention towards investments and donations in goodwill projects which, upon further scrutiny, prove to be of little value with regard to reaching the expressed goal of mitigating the negative impact of the digital services and products themselves.

Monoculture

Through the lens of a small subset of human experience and circumstance it is difficult to envision and foresee the multitudes of perspectives and fates that one new creation may influence. The homogeneity of those who have been provided the capacity to make and create in the digital space means that it is primarily their mirror-images who benefit – with little thought for the wellbeing of those not visible inside the reflection.

Power concentration

When power rests with a few, their own needs and concerns will naturally be top of mind and prioritized. The more their needs are prioritized, the more power they gain.

Unaccountability

There are very few mechanisms in place to hold the creators of digital solutions accountable for any number of real-world harms instigated by the design and development of tech services and products. Often both machine-makers and lawmakers are unaware of harm. At other times the machines themselves are perfect scapegoats.

When machines are sold, they are lauded as the epitome of faultlessness. When machines fail, it is because "it is what machines do", and with more financing they can once again become fault-free. Human creators always appear devoid of responsibility.

Digital gaslighting

An advantage in knowledge makes it possible to frame concerns and hesitancy as the fault of the user or someone else. When users are harmed they are sometimes led to believe it is by their own doing, and deserved.

Digital obfuscation

It is easy to hide far-reaching agreements and terms in far-from-intuitive digital locations and in walls of content obscured by deceptive language or innocent phrasing.

Naive recklessness

In the wake of overconfidence and a naive understanding of both the value of data and the security of online storage, personal information is often haphazardly managed and accessed by problematic actors.

Machine

As more and more decision-making is automated, more humans must bow to algorithms, machine learning and obscurity. These Machine elements describe the inherent qualities of machines that exacerbate potential harm.

Algorithmic injustice

Nothing that is built can be considered neutral. Everything created mirrors the values and beliefs of the creator – consciously or not. We cannot escape power imbalance and we cannot escape prejudice, as these are necessarily coded into the automated decision-makers. Worryingly, the prejudice and its impact can easily be made exponentially more efficient, essentially increasing the reach and severity of harmful outcomes.

Invisible decision-making

The more complex the algorithms become, the harder they are to understand. The more people are involved, the more time passes, the more integrations with other systems are made and the faultier the documentation, the further the algorithms drift from human understanding. Many companies will hide proprietary code and evade scrutiny, sometimes themselves losing understanding of the full picture of how the code works. Decoding and understanding how decisions are made will be open to far fewer people.

Note: I previously used a description of algorithms progressing autonomously here. This has been removed as it can mislead with regards to accountability. See my post on Faux-tonomy. (Updated May 1, 2022)

Psycho-engineering

With infinite interconnected data points about individual biology and behavior the machines will know more about us than we ourselves could ever know. This allows for prediction and modification of behavior in unprecedented and unpredictable ways. While behavioral economics still has an undercurrent of attention to human consent, when authority shifts from human to machine it will be ever more difficult to determine who is in charge. It's one thing for persuaders to be hidden, another for them to hack humans with razor-sharp precision, all the while having humans believe they are acting on their own.

Viral reproduction

Anything created digitally can have an exponential impact far outside the control of the creator.

Society

The advent of digital has repercussions for society at large, influencing value-systems, opportunity, fear and safety to name a few. Things that were not previously possible are top of mind and often framed in savior-like qualities promised by digital transformation. New-found abilities and efforts to record and measure everything in numbers afford less attention to spirituality, compassion and autistic sensitivity.

Rule of Quantity

Faster, bigger and more efficient have never been easier to measure, and anything that can be awarded a number, rank or score often is. This is rarely out of necessity, or with a strategic end in sight, but more commonly because "it's possible". Humans are more often judged by a number, in a numbers game that now permeates business, medicine, physical exercise and even the quantity of friends.

Each time we move fast enough to miss desired time with our loved ones we have an opportunity to absorb this lesson of natural balance: not everything can be more at the same time.

Technosolutionism

When stumbling upon a new societal challenge there is widespread belief and determination that digital technology should be used to solve it. This dogma is quick to dismiss non-digital suggestions. Ingrained in technosolutionism is of course a deep conviction that technology can solve problems but also often a glaring disregard for technology's ability to create problems.

Attention economy

Modern society is a competition for attention, conducted in ways that strive to create opportunities for attention where there previously were none. This makes it profitable and desirable to push for less sleep, support less time with loved ones, encourage multitasking and engage in behavior tracking and modification.

Surveillance capitalism

The more we know, the more we can persuade. Data about humans – coupled with their attention – allow organisations to target people when they are at their most vulnerable to specific messaging. This vastly increases the chances of a desired behavior or purchase taking place. The one who knows the most has the most power and can charge the highest prices. The market rewards them rather than questioning the surveillance required to gain that power.

Rampant harassment

The platforms enabled for greater numbers and greater attention also empower each and every one with enhanced abilities for bullying, provoking and tyrannizing at scale and for personal enjoyment. Digital harassment and terror can happen anonymously and 24/7, exposing a great number of people to trauma and suffering that is ever harder to evade as society demands their digital availability.

Human

While most aspects of digital ethics are related to negative impact on human wellbeing, certain elements hit closer to home and are more direct and tangible in how they impact human wellbeing.

Exclusion

Language, citizenship, physical ability and a multitude of other reasons exclude people from equal access to digital. And yet when digital solutions carry potential to include most everyone, digital is still designed in ways that keep the proverbial door closed. Organisations choose to dismiss human beings on the grounds of 'edge-cases', financial constraints or standardized ways of working.

Voice suppression

Rarely-heard voices are not sought out to participate in digital development. Instead the already strong voices are heard and enabled and find their needs met as the silent voices fade even more. The suppressed voices, not invited to the platforms, find themselves without one. When they are invited, some people will still struggle to communicate equally well on digital platforms that require a certain level of experience and aptitude in writing. Uncertainty and anxiousness are more easily identified in physical meetings, while online they can go unnoticed.

Abuse enablement

Technologies designed to control things prove themselves equally capable of controlling beings. In abusive relationships the abuser now wields power over lights, doors, communication, cameras and location-enabled devices. The systems fail to provide protection for those who find themselves targeted by tech-enabled abuse.

Privacy devaluation

To gain access to services, paying with personal information has become the norm and is often accepted without a second thought. Understanding is generally low of how personal data is stored, how much is given away and how it is used. At some point the experience evolves into resignation, accepting the terms not because of a good deal but because the exchange has been normalized and there is a sense of being too late for regrets. There is a concerning tolerance of sharing not only your own data but also the data of friends and family. And the desire to understand more appears to dwindle with time.

Behavior suppression

Anything we do and say can end up on the Internet. Anything we do and say in public can be recorded. Anything we do and say at work can be measured to determine performance. We know our purchases end up in a database because rewards programs tell us what we buy. Our locations are tracked. Meeting software can gauge our "attention". The freedom to act is always inhibited by apprehension of how that act will be perceived out of context in the eyes of an external assessor. The number of assessors keeps rising.

Biometric abuse

Collection of biometric data is on the rise while neglecting the permanence, and thus immense delicacy, of this data. Once lost, biometric data can rarely be reclaimed, and it can be used to identify even where anonymity has been promised. The continuous collection of vast amounts of data about humans is creating biometric markers where many did not expect it: beyond fingerprints, face recognition and gait, there is already use of heartbeat recognition and keystroke biometrics.

Supervision

This section relates to the difficult practice of nationally controlling and managing the potential negative impact related to digital services and products.

Permanent impact

Many of the problems in the digital space are triggered by actions that are not easily reversed. When data has been shared, it can be copied endlessly. When a fingerprint has leaked, it cannot be changed. When a machine learning algorithm has been implemented, few can understand it and even fewer can change its behavior. Additionally, the values of today are coded into decision-making systems, disregarding how the values of societies change over the span of a few decades. The prejudice of today is what we are teaching the digital systems that make decisions tomorrow.

Education defects

Integrity, ethics and privacy laws are rarely on the curriculum of the training programs that have given us the digital professionals of today. Often programming and design skills are self-taught or gained through online courses with no cross-disciplinary teachings. While demand for digital developers and makers continues to rise, employers pay little attention to an ability to care for, and reason around, human wellbeing.

Regulation defects

Law and policy often attempt to target each new technology individually while missing the bigger picture. Change happens at a higher rate than regulation can manage, which indicates that what the regulation should begin to manage is the rate of change itself and the premises for change. How many times during a lifetime should a citizen need to relearn how to apply for a job, buy a bus ticket or book a doctor's appointment – and who should bear the cost of that change?

Private public spaces online

Many countries have moved towards the informal acceptance of privately-owned services as platforms for public discourse and free speech. Still, control of the platforms and their participants is determined by the private companies and not by the state, and equal access laws rarely apply to private companies to the same extent as to the public sector. This creates many grey zones and confusion with regard to accountability, accessibility and responsibility, all while affecting people's ability to participate in an open society.

Geographic resistance

When trying to manage digital services, governments are to a large extent limited by physical boundaries and jurisdictions that the digital systems are happy to ignore. Many countries find their citizens adopting tools and services that are difficult to influence through national or regional law.

Environment

The elements in this section refer to problems that are created and/or overlooked during the making of digital tools. They are often ignored because they are considered secondary conditions and do not relate directly to the intent of what is being created. Sometimes seen as untouchable or unavoidable, these elements still need to be addressed or managed to mitigate negative impact.

Worker endangerment

In services where millions of users convene to publish content there is always a percentage of harmful and illegal content that needs to be filtered and removed. To manage this, low-wage workers may have to sift through hate speech, violent attacks against animals and graphic pornography. Workers are also endangered by the mere knowledge of the harm their work contributes to, while under duress or external pressure that makes it difficult for them to refuse contributing to that harm.

Supply chain neglect

To realise digital services and solutions we need hardware and software. Behind the production of these there can be any number of oppressive relationships and exploitation of workers. One example is the need for cobalt, used in lithium batteries, which is often mined under unfair conditions in oppressive environments reminiscent of slavery. Failing to consider one's own part in the supply chain required to deploy digital services is to ignore accountability for potential harm to others.

Unequal access

Only slightly more than half the world's population has access to the Internet, and within different countries access to the Internet and specific services will vary. In some countries the Internet is systematically used as an oppressive force by closing and opening access during unrest. Although the Internet is often talked about as open, it is important to recognize how it is closed to a significant number of people around the world, and to reflect on the consequences of ignoring them.

Ecological neglect

As digital services grow they demand more energy, more transportation of goods, and more data centers that displace physical land and communities. Again, it is easy to overlook the overall environmental impact of creative work in the digital industry when it is not being measured, and other measurements take precedence. As always, not all variables can grow for the better at the same time – and we need to be aware of what grows for the worse based on our actions.

How to use

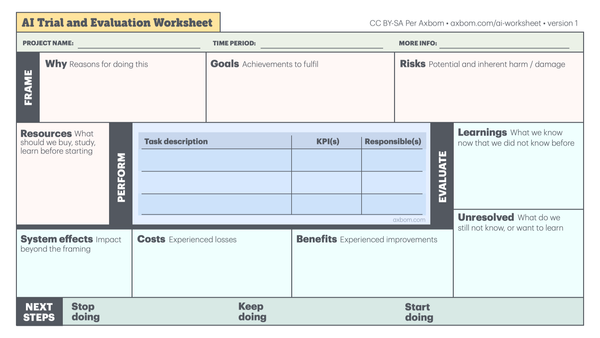

The chart can be used as an artefact to spark discussion and serve as a reference when doing risk assessments. I also use it to plan lessons for my courses.

Sometimes the sheer number of elements can feel overwhelming. Here are two ways of breaking it down:

1. One practical way of using the chart in workshop format is to have each working group select 3-4 elements, each from a different section, and encourage discussion around which examples of digital harm that particular combination brings to mind.

2. We can also start with identifying different services that we use every day and pick from the chart to identify both obvious and not-so-obvious elements that apply within our context of use.

If you find other ways to use the chart in your work that you wish to share, do let me know in the comments or by contacting me.

High-resolution image and PDF

Find the latest high-resolution chart (PNG) and PDF in my Dropbox here:

Background

I have spent the last five years reading, teaching and having conversations around all the things that lead to negative impact in digital. I do this to become better at building and designing digital products that promote wellbeing and mitigate harm. It's not about hindering digital progress, it's about choosing the best direction for it. You can freely access my research collection of Digital Ethics links – it's continuously updated. I also provide a list of inspirational books on Digital ethics and sustainability.

I created and maintain The Elements of Digital Ethics diagram to summarize many of the learnings and insights from my research. I also talk at conferences and events, as well as teach workshops, to spread awareness on digital ethics.

This chart is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License.

Cite "Per Axbom" as the creator and link to this web page when applicable.

Subscribe to the newsletter to be alerted when the chart is significantly updated.
