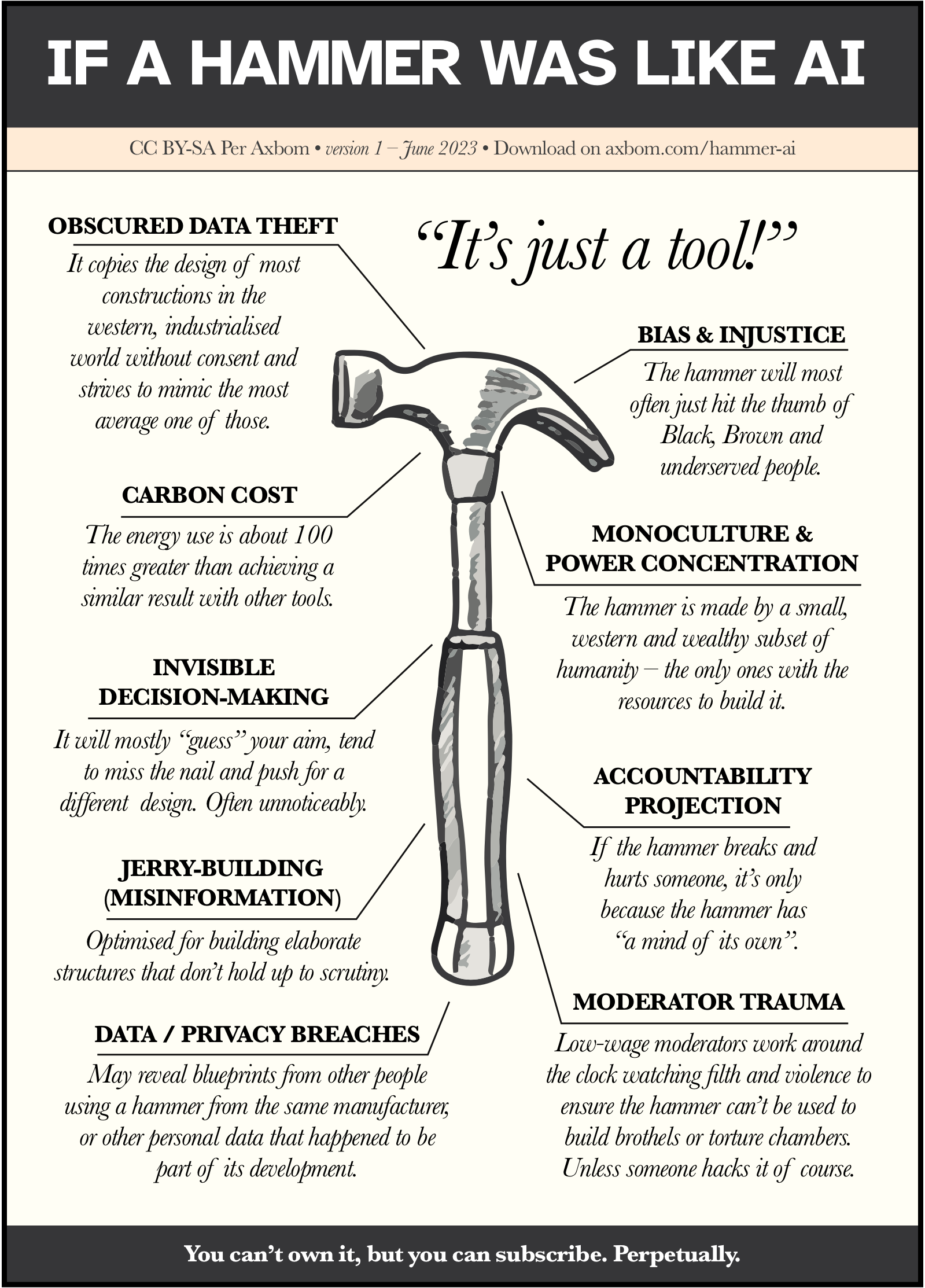

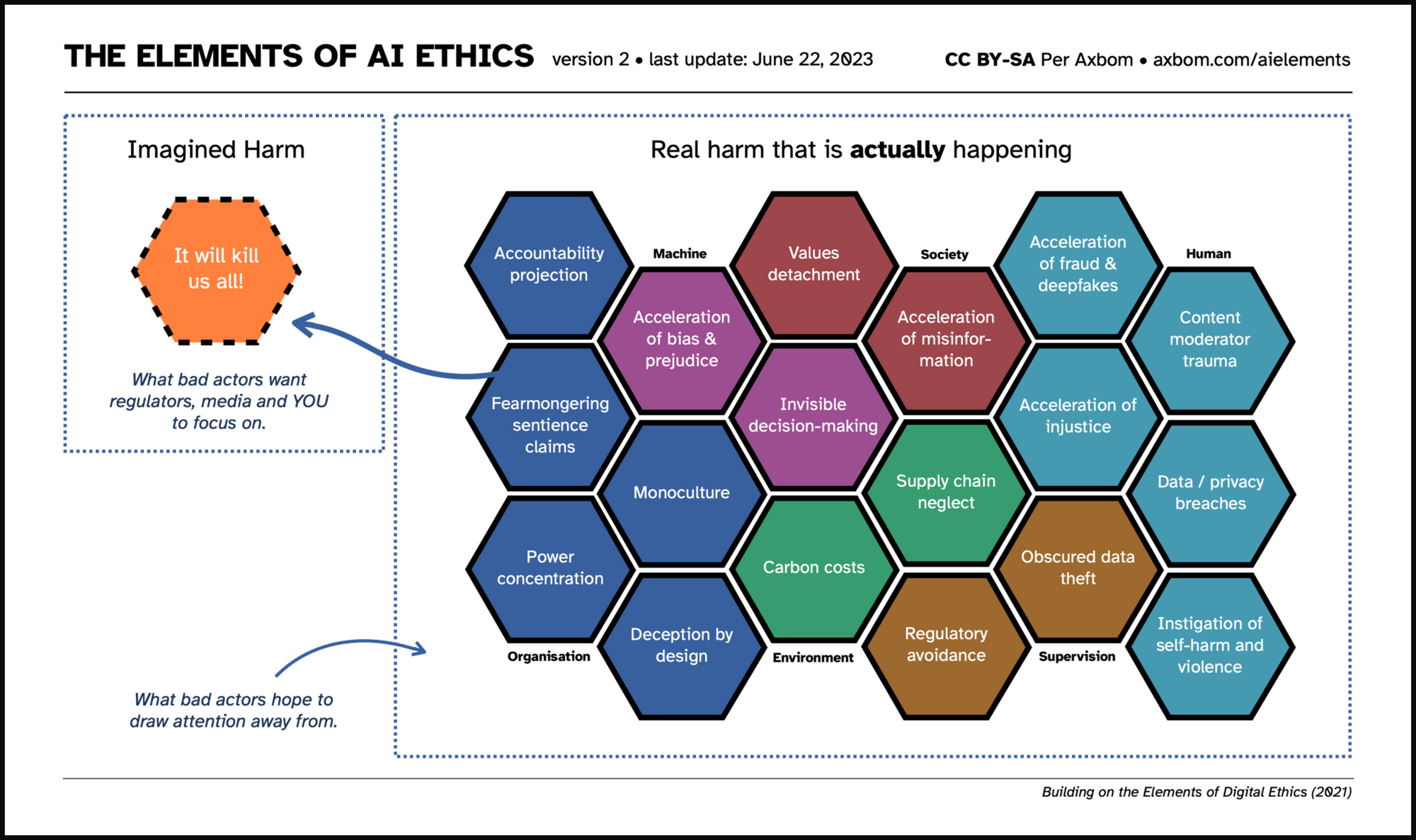

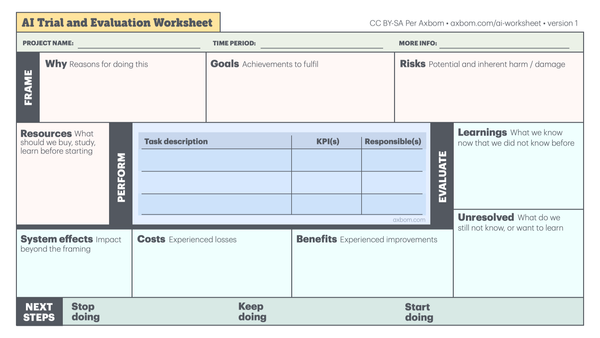

The harmful effects of technology can be managed and mitigated. But not if we avoid talking about them, nor if we allow ourselves to be distracted by misleading narratives. In the chart The Elements of AI Ethics I map out harms that are already being reported, that have been ongoing, and many of which were predicted before they happened. As a tool, it can provide guidance and talking points for prioritising your work with "smart" tools. It also acknowledges that every team that deploys or uses AI needs a mitigation strategy for many different types of harm.

This chart builds on The Elements of Digital Ethics (2021). The harms in the original diagram are all still relevant. This chart provides a focused overview of the types of harm we are seeing proliferate as AI advances across different industries, especially in general-purpose and generative tools. You can refer to the original chart to get an idea of how a tool like this can be used in education and project work.

The following are brief summaries of each element to give you a better understanding of what they refer to. I am also putting together a workshop and talk around these elements. If that is something that would interest you and your organisation, let me know.


The Elements – Brief summaries

There are six overarching sections (Organisation, Machine, Society, Human, Supervision and Environment), with a total of 18 elements in the chart.

Organisation

These elements apply to the qualities and concerns of organisations that develop AI-enabled services and products in both the private and public sector.

Accountability projection

The term accountability projection refers to how organisations tend not only to evade moral responsibility but also to deflect the very real accountability that must accompany the making of products and services. Projection is borrowed from psychology. Here it refers to how manufacturers, and those who implement AI, wish to be free from guilt and project their own weakness onto something else: in this case, the tool itself.

The framing of AI appears to give manufacturers and product owners a "get-out-of-jail-free card" by projecting blame onto the entity they have produced, as if they had no control over what they are making. Imagine buying a washing machine that shrinks all your clothes, and the manufacturer evading any accountability by claiming that the washing machine has a "mind of its own".

Machines aren't unethical, but the makers of machines can act unethically.

This is very similar to "moral outsourcing", a term coined by Dr. Rumman Chowdhury. It refers to how makers of AI manage to defer moral decision-making to machines, as if they themselves had no say in the output.

Monoculture

Today, there are nearly 7,000 languages and dialects in the world, yet only 7% are reflected in published online material. 98% of the internet’s web pages are published in just 12 languages, and more than half of them are in English. So even when the entire internet is used as a source, it still represents only a small part of humanity.

Although 76% of the online population lives in Africa, Asia, the Middle East, Latin America and the Caribbean, most online content comes from elsewhere. Take Wikipedia, for example, where more than 80% of articles come from Europe and North America.

Now consider what content most AI tools are trained on.

Here is what I originally wrote about this element:

Through the lens of a small subset of human experience and circumstance, it is difficult to envision and foresee the multitude of perspectives and fates that one new creation may influence. The homogeneity of those who have been given the capacity to make and create in the digital space means that it is primarily their mirror images who benefit, with little thought for the wellbeing of those not visible in the reflection.

Deception by design

Many AI tools are deliberately built to provide the illusion of talking with something that is thinking, considering or even feeling remorse. These design decisions feed into the dangerous mindset of regarding the tools as sentient beings. As mentioned, this allows manufacturers to evade responsibility, but it also contributes to complex trust and relationship issues that humans have previously only had with actually sentient beings. We know very little about the long-term effects on people's emotional life and well-being of regularly interacting with something designed to give the appearance of having human emotions when it in fact has none.

There are many reasons to assume that people can build strong bonds with tools that produce unpredictable output. And the number of people who need professional help to manage their mental health, but turn to AI chatbots instead, is growing fast. Sometimes they are even encouraged to do so.

Power concentration

When power is concentrated in the hands of a few, their own needs and concerns will naturally be top of mind and prioritized. The more their needs are prioritized, the more power they gain. Three million AI engineers amount to roughly 0.04% of the world's population (three million out of about eight billion people).

Fearmongering sentience claims

A growing number of reports describe a future, said to be closer every day, in which an AI takes over the world and makes humans obsolete and expendable. This narrative is often fuelled by the biggest names in the industry. By spreading exaggerated warnings of impending danger, they manage to hold attention and control the narrative.

These claims have the effect of:

- Pulling attention away from the real harms that are happening

- Boosting trust in the validity of system output, because the systems are framed as "all-powerful beings"

- Supporting the idea of accountability outsourcing (to the machines)

- Giving more power to the doomsayers because they are the only ones who can save us, and thus also the ones who can tell lawmakers how to regulate

Imagined harm

In the chart I have chosen to make this point overtly clear by showing the element "It will kill us all!" as an imagined harm with no evidence to back it up. Pushing this narrative is a harm in itself.

Machine

As more and more decision-making is automated, more humans must bow to algorithms, machine learning and obscurity. These Machine elements describe the inherent qualities of machines that exacerbate potential harm.

Acceleration of bias and prejudice

One inherent property of AI is its ability to act as an accelerator of other harms. Because AI is trained on large amounts of data (often without supervision) that inevitably contain biases, abandoned values and prejudiced commentary, these will be reproduced in its output. This will likely often happen unnoticed (especially when output is not actively monitored), since many biases are subtle and embedded in common language. At other times it will happen quite clearly, with bots spewing toxic, misogynistic and racist content.

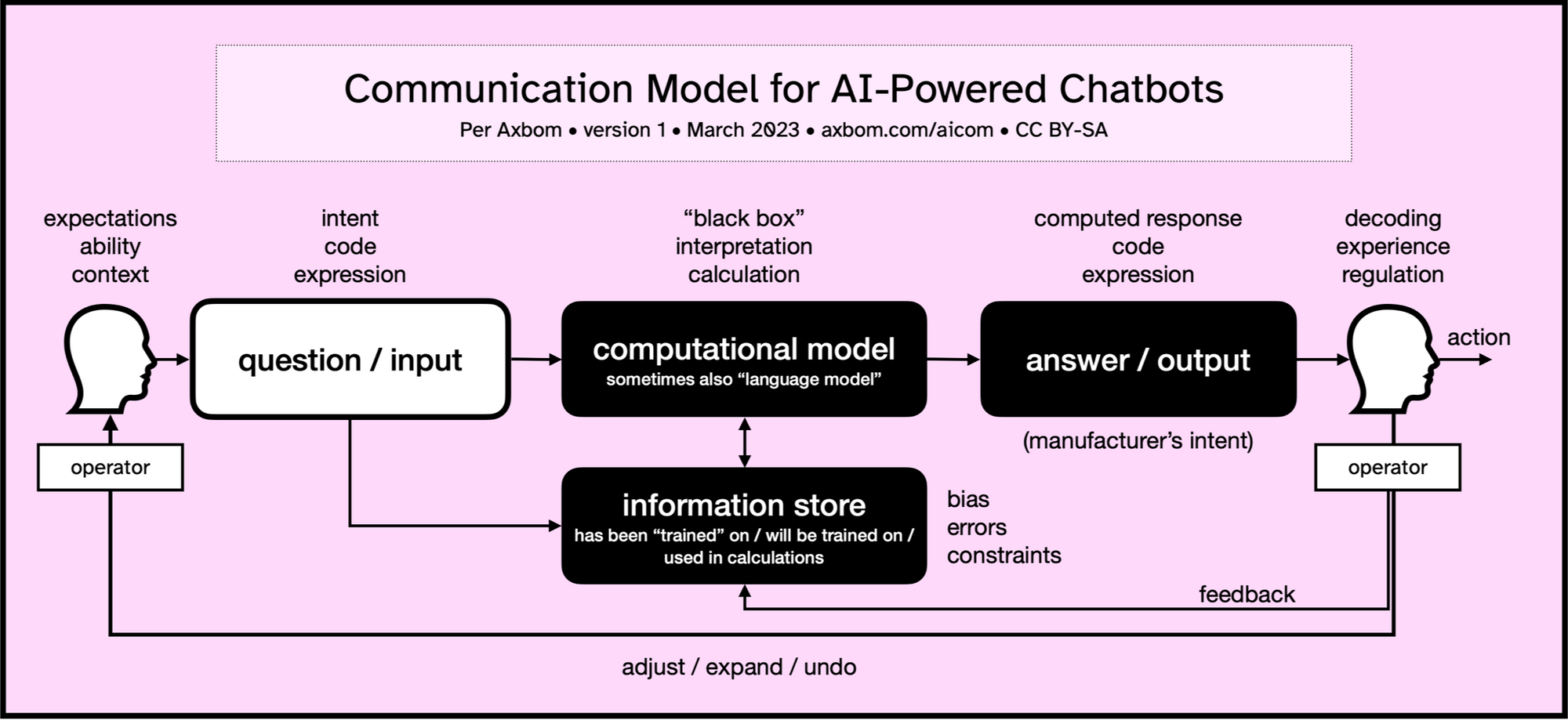

Invisible decision-making

The more complex the algorithms become, the harder they are to understand. As more people become involved, time passes, integrations with other systems are added and documentation falls behind, the further the algorithms drift from human understanding. Many companies hide proprietary code to evade scrutiny, and sometimes lose sight themselves of how the code works as a whole. Decoding and understanding how decisions are made will be open to ever fewer people.

And it doesn't stop there. This also affects autonomy. By obscuring decision-making processes (how, when, why decisions are made, what options are available and what personal data is shared) it is increasingly difficult for individuals to make properly informed choices in their own best interest.

Society

The advent of digital technology has repercussions for society at large, influencing value systems, opportunity, fear and safety, to name a few. Things that were not previously possible are top of mind and often framed with the savior-like qualities promised by digital transformation (and today most often by AI). New-found abilities and efforts to record and measure everything in numbers afford less attention to spirituality, compassion and autistic sensitivity.

Values detachment

Efficiency that involves handing over certain tasks to machines necessarily means that we give something up. We detach something. While it is often argued that we give up the mundane and tedious in order to live happier, richer lives, this is not always the case. We need to keep an eye on what we are losing when we gain something else. By deferring even decision-making to machines (built by someone else and trained on an obscure mass of content), many people are incentivized to give up work that may have been more important than the decisions themselves, e.g. speculation, reasoning and rumination. What we value changes, and it is not always obvious that we are handing over even the concept of our own value-based decision-making to an external party.

If societal values change over time, how will they change once they are part of the machine's inner workings?

Acceleration of misinformation

The one harm that almost everyone appears to agree on is the abundance of misinformation these tools enable at virtually no cost to bad actors. This is of course already happening. There is both the misinformation that troll farms can now happily generate en masse, with perfect grammar in many languages, and the misinformation that proliferates, often unknowingly, through the everyday use of these tools.

This second type of misinformation is generated and spread even by highly educated professionals within the most valued realms of government and public services. The tools are simply that good at sounding confident while expressing nonsense in believable, flourished and seductive language.

One concern that keeps coming up, and still lacks an answer, is what will happen when the tools begin to be "trained" on texts that they themselves have generated.

Human

While most aspects of AI ethics are related to negative impact on human wellbeing, certain elements hit closer to home and are more direct and tangible in how they impact human wellbeing.

Acceleration of fraud & deepfakes

Tools that generate believable content with convincing wording, or tools that can imitate your voice or even your likeness, open up the playing field for fraudulent activities that can be harmful to mind, economy, reputation and relationships. People can be made to believe things about others that simply are not true, or even fall prey to the illusion that a family member is talking to them over the phone even when it's a complete stranger.

Acceleration of injustice

Because of systemic issues and the way bias becomes embedded in these tools, that bias will have dire consequences for people who are already disempowered. Automated decision-making tools often rely on scoring systems, and these scores can affect, for example, job opportunities, welfare and housing eligibility, and judicial outcomes.

Content moderator trauma

For us to avoid seeing traumatizing content (such as physical violence, self-harm, child abuse, killings and torture) when using many of these tools, this content needs to be filtered out. For it to be filtered out, someone has to watch it. As it stands, the workers who perform this filtering are often exploited and suffer PTSD without adequate care for their wellbeing. Many of them have no idea what they are getting themselves into when they start the job.

Data / Privacy breaches

There are several ways personal data makes its way into AI tools. First, since the tools are often trained, without supervision, on data available online, personal data ends up in the workings of the tools themselves. That data may have been published online inadvertently, or published for a specific purpose, rarely one that supports feeding an AI system and its plethora of outputs. Second, everyday users enter personal data into the tools through negligent or inconsiderate use, and that data is at times also stored and used by the tools. Third, when many data points from many different sources are linked together in one tool, they can reveal details of an individual’s life that no single piece of information could.

Instigation of self-harm and violence

There have been several reports of AI chatbots encouraging or endorsing a human operator's abuse towards themselves or others. Will manufacturers be able to guarantee that this never happens in the future? And if not, what other mitigating factors need to be designed into the tools?

Supervision

This section relates to the difficult practice of overseeing, regulating and managing the potential negative impact related to AI.

Obscured data theft

To become as "good" as they are in their current form, many generative AI systems have been trained on vast amounts of data that were not intended for this purpose, and whose owners and makers were never asked for consent. The mere publishing of an article or image on the web does not imply it can be used by anyone for anything. Generative AI tools appear to be getting away with ignoring copyright.

At the same time, there are many intricacies that lawmakers and policymakers need to understand to reason well about infringements on rights or privileges. Defenders of generative AI often argue, for example, that images are not duplicated and stored but rather used to feed a computational model, and that generative AI is therefore only "inspired" by the works of others rather than "copying" them. There are several ongoing legal cases challenging this view.

Great walkthrough of how generative AI steals copyrighted content, and how it does actually store content as well.

Regulatory avoidance

AI can be seen as evading regulation through the sheer number of oversteps committed in its deployment and use. One possibility is that misuse of other people's content may have to be normalised because oversight is unattainable, unless more constraints are quickly placed on "data theft", deployment and use. The fact that so many of these companies can release tools based on the works of others, without having to disclose what they are based on, can only be seen as a moral dilemma of vast proportions. Moreover, which personal data lies hidden in these tools, ready to be abused or misused in future output, is unknown not only to the operators of the tools but to a large extent even to their makers.

Differing national laws also create new obstacles to implementation across borders. OpenAI CEO Sam Altman, for example, said that OpenAI would pull out of the EU if it had to abide by the proposed regulation. [Update: Two days later he backpedalled on those threats.]

Environment

The elements in this section refer to problems that are created and/or overlooked during the making of digital tools. They are often ignored because they are considered secondary conditions and do not relate directly to the intent of what is being created. Sometimes seen as untouchable or unavoidable, these elements still need to be addressed or managed to mitigate negative impact.

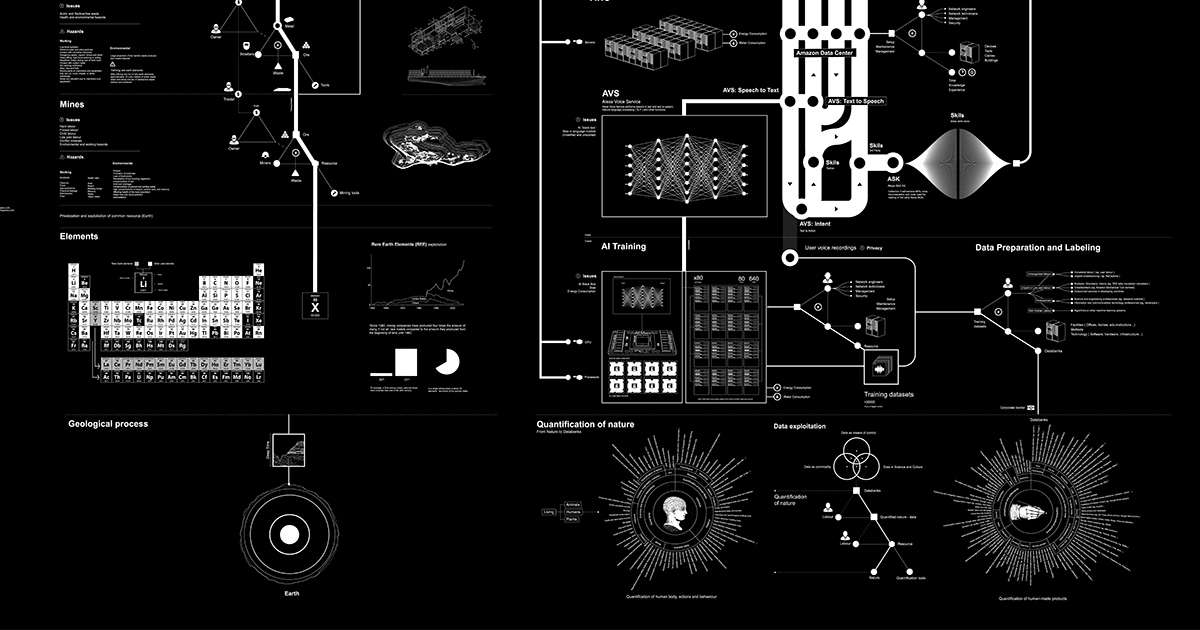

Supply chain neglect

To realise digital services and solutions we need both software and hardware. Behind the production of these there can be any number of oppressive relationships and exploitation of workers. One example is the need for cobalt, used in lithium-ion batteries, which is often mined under unfair conditions in oppressive environments reminiscent of slavery. Failing to consider one's own part in the supply chain required to deploy digital services is to ignore accountability for potential harm to others.

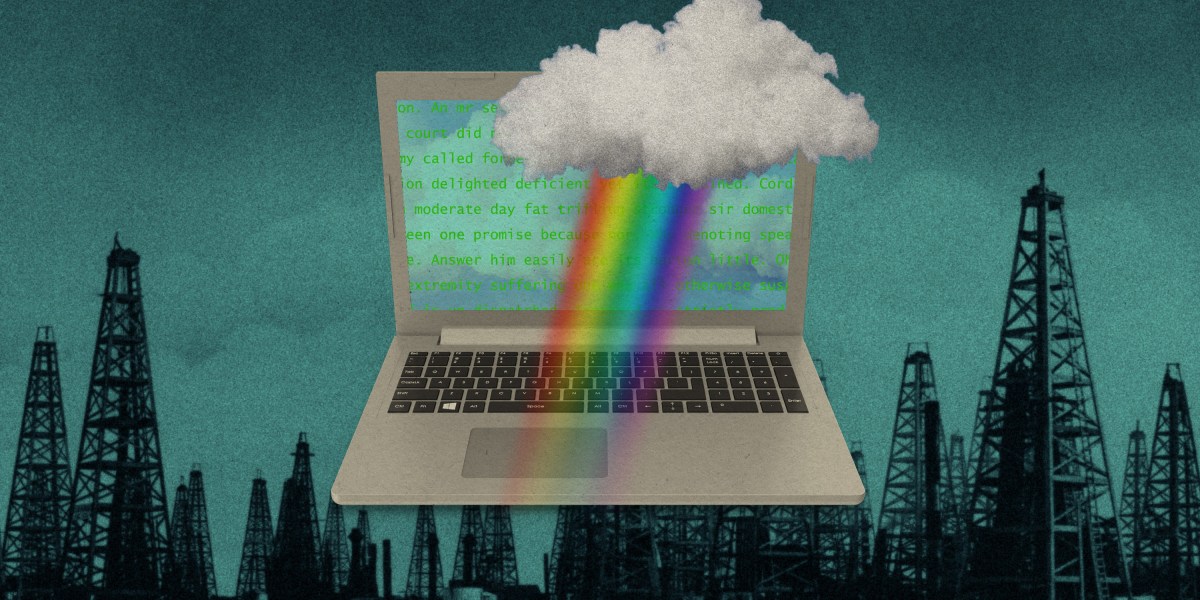

Carbon costs

The energy required to source data, train the models, power the models and compute all the interactions with these tools is significant. While exact figures are often kept under wraps, there have been many studies into the large environmental cost of developing AI and the measures that need to be taken. With this in mind, it is apt to ask whether every challenge really is looking for an AI solution. Will consumers perhaps come to see the phrase "AI-Powered System" in the same light as "Diesel-Powered SUV"?

Update December 5, 2023: New research shows that “Generating 1,000 images with a powerful AI model, such as Stable Diffusion XL, is responsible for roughly as much carbon dioxide as driving the equivalent of 4.1 miles in an average gasoline-powered car.”

The hopeful part

The reason I want to contribute to the understanding of the types of harm entangled with the growth of AI is that all the harms are under human control. This means that we can talk about them and address them. We can work to avoid or minimize these harms. And we need to demand transparency from manufacturers around each issue. Rather than pretending new technology is always benevolent because it will "improve lives" (definitely some lives more than others), we are better served by surfacing these conversations.

If you agree, I always appreciate when you can help me by sharing my posts and tools in your own networks. And I am always happy to hear about your own thoughts, perspectives and experiences. 😊

